Navigating Observability: Logs, Metrics, and Traces Explained

Navigating Observability: Logs, Metrics, and Traces Explained

Imagine you're the captain of a spaceship, navigating through the vast expanse of the digital universe. Your mission: to ensure your systems are running smoothly and efficiently. But in this complex, ever-changing landscape, how do you maintain clear visibility and stay in control? Fear not, for your trusty crew - the three pillars of observability: logs, metrics, and traces - is ready to guide you through the cosmos of modern software architecture.

Introduction to Observability

Observability, a concept rooted in control theory, has taken the IT world by storm. Observability is the ability to infer the internal state of a system based on its external outputs.

It goes beyond traditional monitoring, offering a holistic view of your system's health and performance. While monitoring focuses on predefined metrics and alerts, observability enables proactive exploration and discovery of unknowns.

As businesses embrace digital transformation and shift towards cloud-native architectures, observability becomes the north star, illuminating the path to success.

So, how is it different from traditional IT monitoring, and what are its key components?

- Comparative Analysis with IT Monitoring: Traditional IT monitoring relies on predefined dashboards and alerts, while observability empowers teams to ask new questions and explore system behavior in real time.

- Importance of Metrics, Logs, and Traces: These pillars provide granular insights into system performance, enabling teams to detect anomalies, diagnose issues, and optimize resources in cloud infrastructures.

Let’s expand further on metrics, logs, and traces, which are better known as the three pillars of observability.

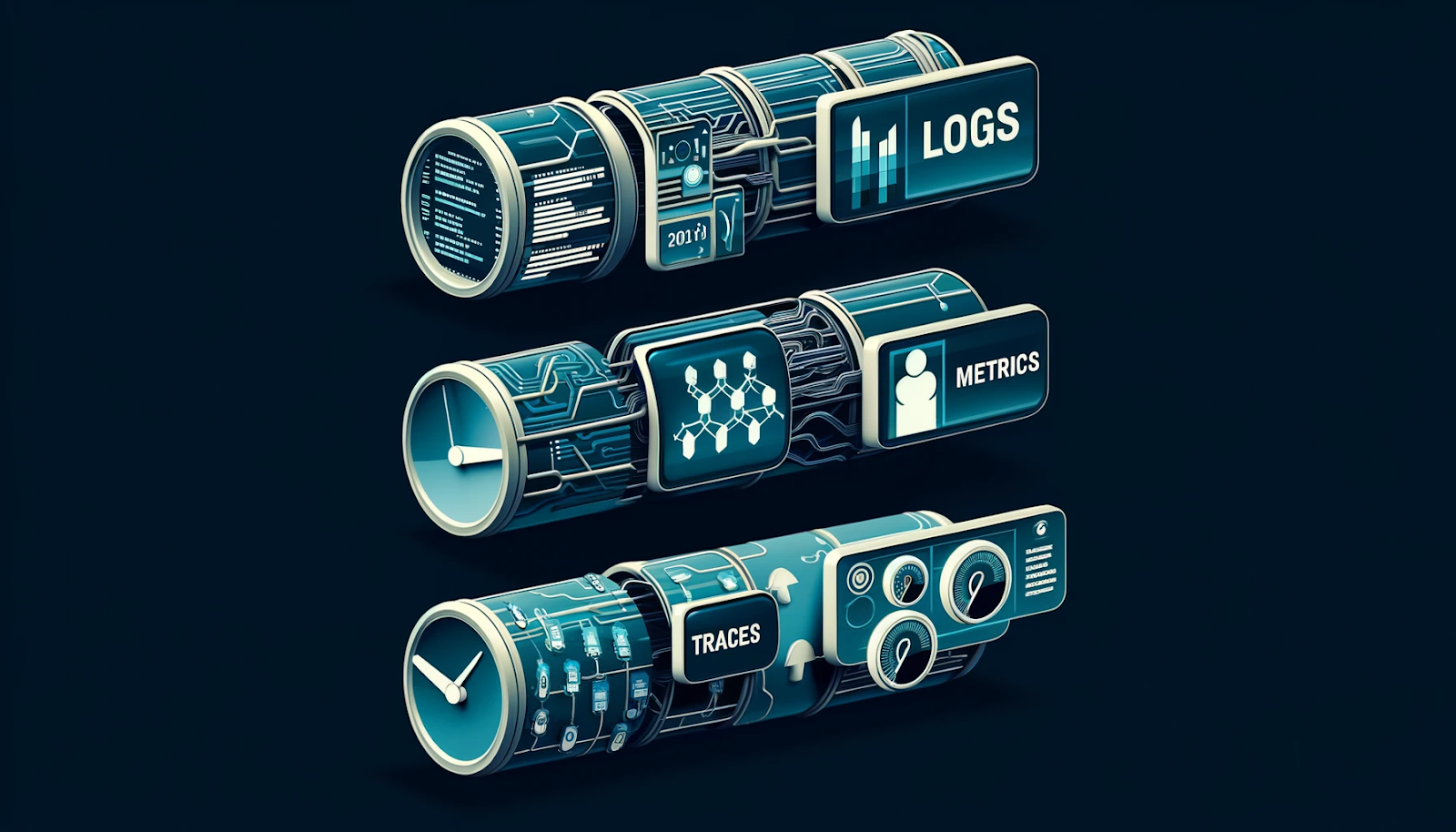

The Three Pillars of Observability

Just as a tripod provides stability, the three pillars of observability - logs, metrics, and traces - form a solid foundation for understanding your system's behavior. As you venture into the vastness of logs, metrics, and traces, consider leveraging a comprehensive tool that can simplify the management and analysis of your observability data.

- Logs: Detailed records of events that provide a granular view of system activity.

- Metrics: Numeric measurements that quantify the health and performance of your services.

- Traces: End-to-end maps of requests as they flow through your distributed system.

These pillars interact and complement each other in observability frameworks:

- Logs provide context for metrics spikes and trace anomalies

- Metrics can trigger alerts that guide log analysis

- Traces can uncover the source of logged errors and performance bottlenecks

They offer a comprehensive view of your system's health, performance, and dependencies.

The First Pillar: Exploring Logs

Logs are the lifeblood of observability, pumping rich contextual data through your system's veins. They come in various formats:

- Plaintext Logs: Unstructured, human-readable event records

- Structured Logs: Machine-parsable logs, often in JSON format

- Binary Logs: Compact, encoded logs optimized for storage and processing

Generating meaningful logs requires careful instrumentation of your application code:

- Capture key event details like timestamps, severity levels, and context

- Use consistent log levels (debug, info, warn, error) to control verbosity

- Implement centralized log aggregation and analysis for a unified view

Logs excel at providing granular insights for debugging, but their high volume can pose management challenges. Efficient indexing, storage, and querying are essential to extracting valuable insights from the noise. Best practices include adopting a structured logging format, using meaningful log messages, and leveraging log analytics tools to derive actionable intelligence.

Platforms like OpenObserve, with their efficient storage and advanced data manipulation using VRL (Vector Remap Language), can significantly streamline your log management efforts. This allows you to focus on deriving actionable insights rather than managing data overload.

Now that we've untangled the web of logs let's zoom out and use metrics to check our system's heartbeat.

The Second Pillar: Understanding Metrics

Metrics are your system's vital signs, providing a continuous pulse of its health and performance. They are lightweight numeric values that allow you to track and alert on critical indicators such as latency, error rates, and resource utilization.

The power of metrics lies in their ability to surface high-level trends and anomalies that may be absent from individual log entries. They enable you to:

- Set performance baselines and define SLAs

- Monitor system health and identify degradations

- Trigger automated alerts and drive capacity planning

However, tracking metrics across multiple services and dimensions in complex distributed systems can be challenging. Effective metric design, aggregation, and correlation are crucial for deriving meaningful insights.

While metrics provide a bird's-eye view, logs offer a microscopic lens into system behavior. Used together, they form a powerful observability duo. With our high-level view mapped out with metrics, it's time to connect the dots and trace the footsteps of our data across services.

The Third Pillar: Tracing in Detail

Distributed tracing is the glue that binds your observability story together. By assigning a unique identifier to each request, traces allow you to follow its journey across service boundaries, revealing application flow, performance bottlenecks, and errors.

Traces provide a unified view of system behavior, connecting disparate events into a coherent narrative. They offer several key advantages:

- Visualize end-to-end request flows and service dependencies

- Identify performance bottlenecks and latency sources

- Correlate errors and exceptions across distributed services

- Understand resource consumption and optimize system efficiency

Integrating your observability practice with OpenObserve can further enhance your tracing capabilities, offering seamless integration with service meshes and advanced analytics for an in-depth understanding of system behavior.

Integrating tracing with service meshes like Istio takes observability to the next level, enabling advanced features like traffic monitoring and distributed debugging.

In contrast to logs, which capture discrete events, traces stitch together these events into a complete narrative, providing a holistic view of your system's behavior.

Having followed our data's journey, let's take a step back and see how a tool like OpenObserve can simplify this complex expedition.

OpenObserve and Observability

On this journey, OpenObserve is a recommended companion. This open-source observability platform simplifies the collection and analysis of logs, metrics, and traces, empowering you with deep system visibility.

OpenObserve provides a consistent data model and APIs for ingesting and querying observability data, reducing complexity and vendor lock-in. It offers out-of-the-box integrations with popular observability tools, making it easy to incorporate into your existing ecosystem.

OpenObserve's powerful query language and built-in anomaly detection enable you to derive deep insights and automate problem resolution. Its user-friendly GUI and support for SQL queries make it an ideal companion for both seasoned professionals and those new to observability, facilitating easy exploration and discovery of unknowns.

When equipped with the right companion, it becomes easier to navigate through potential pitfalls with some tried and tested practices.

Challenges and Best Practices

Who says you can't navigate the complexity of IT? It's like trying to solve a Rubik's Cube in the dark—frustrating but oddly satisfying when you get it right.

While observability is a game-changer, it's not without its challenges. Common pitfalls include data overload, siloed tools, and the need for more standardization.

To overcome these hurdles:

- Adopt consistent naming conventions and data models across your observability stack.

- Leverage AIOps tools for intelligent, alert correlation and root cause analysis.

- Embrace open standards like OpenTelemetry for vendor-neutral instrumentation.

- Foster a culture of collaboration and continuous improvement.

Utilizing OpenObserve, which integrates effortlessly with primary storage services and supports open standards, can mitigate the risk of vendor lock-in, ensuring your observability practice remains robust and flexible.

For deploying an effective observability strategy, essential guidelines include:

- Integrate observability into your system design from the start

- Capture data in real time to enable proactive monitoring

- Establish transparent processes for incident response and postmortems

- Continuously refine your observability practice based on learnings

Armed with best practices, let's gear up with the right tools to amplify our journey toward observability excellence.

Leveraging Observability Tools

You need the right tools in your observability arsenal to go where no one has gone before boldly. Logging as a service (LaaS) platforms, application performance monitoring (APM) solutions, and service meshes are essential for comprehensive visibility.

LaaS and APM tools streamline log management and provide deep application insights, enabling you to detect and resolve issues faster. InfluxDB 3.0, a time series database optimized for storing and querying metrics and events, is a critical component of the observability stack.

As systems grow more complex, AIOps tools that leverage machine learning for anomaly detection and root cause analysis become indispensable. Automating alert correlation and problem diagnosis helps you focus on high-impact issues and reduces the time needed for resolution.

By including OpenObserve in your observability arsenal, you benefit from up to 140x lower storage costs than Elasticsearch alone, freeing up resources to invest in other areas of your digital transformation journey.

With our toolbelt fully stocked, let's take a moment to reflect on how far we've come and where we're going in the observability universe.

Conclusion

In the fast-paced digital universe, observability is your beacon of light, guiding you through the challenges of modern software development. You can gain deep visibility into your system's behavior by harnessing the power of logs, metrics, and traces, ensuring optimal performance and reliability.

Remember, observability is not a destination but a journey of continuous improvement. As you explore new frontiers and face new challenges, let OpenObserve be your co-pilot, helping you navigate the complexities of the observability landscape.

So, dear captain, chart your course, trust your observability crew, and boldly embrace the future of software excellence.

The stars are the limit!

Author:

The OpenObserve Team comprises dedicated professionals committed to revolutionizing system observability through their innovative platform, OpenObserve. Dedicated to streamlining data observation and system monitoring, offering high performance and cost-effective solutions for diverse use cases.