Open Source Tools for Data Observability

Introduction to Open Source Data Observability

Ensuring that your systems are running smoothly and efficiently is critical. Data observability is a key component of this process, allowing you to monitor, track, and analyze your data across your infrastructure. It provides insights into system performance, helps identify issues before they become critical problems, and ensures that data flows seamlessly.

Definition and Importance of Data Observability

Data observability refers to the comprehensive monitoring and analysis of data across all stages of its life cycle within your systems. It encompasses various aspects such as metrics, logs, traces, and events, offering a holistic view of your data environment. This observability is essential for:

- Performance Optimization: Ensuring systems are operating efficiently and identifying bottlenecks.

- Issue Detection and Resolution: Quickly detecting and resolving issues before they impact end-users.

- Compliance and Security: Monitoring data access and usage to ensure compliance with regulations and to identify potential security breaches.

- Operational Insights: Gaining insights into operational trends and making informed decisions based on data.

The Role of Open Source in Enhancing Data Observability

Open source tools have revolutionized data observability by providing powerful, flexible, and cost-effective solutions. Unlike proprietary tools, open source observability tools offer:

- Cost-Effectiveness: They eliminate the high licensing fees associated with vendor-managed solutions, making advanced observability accessible to organizations of all sizes.

- Flexibility and Customizability: These tools can be tailored to fit specific needs, ensuring seamless integration with existing systems and workflows.

- Community Support and Innovation: Open source projects benefit from community-driven improvements and a wealth of shared knowledge, ensuring rapid advancements and extensive support.

In this post, we'll explore some of the top open source data observability tools, along with one commercial platform for comparison, and look at their core features, integrations, and typical use cases. Whether you're looking to enhance performance, ensure compliance, or gain operational insights, these tools can help you achieve comprehensive data observability.

Key Open Source Data Observability Tools

Choosing the right data observability tool is crucial for maintaining a robust and efficient data infrastructure. Each tool has its unique strengths and features, making it essential to understand what each offers and how it can fit into your specific environment. Here’s an overview of some of the most powerful open source tools available for data observability.

Overview and Significance of Selecting the Right Tool

Here are some key considerations when selecting a data observability tool:

- Compatibility: Ensure the tool integrates seamlessly with your existing systems and data sources.

- Scalability: The tool should be able to scale with your data volume and system complexity.

- Customization: Look for tools that offer customizable dashboards and alerts to fit your specific needs.

- Community and Support: A strong community and good support resources are vital for troubleshooting and extending the tool’s functionality.

Great Expectations

Great Expectations is an open-source tool designed to automate data validation and documentation, helping data teams maintain data quality by providing robust profiling and quality checks.

Core Features

- Automated Data Validation: Define and run data validation checks to ensure your data meets specified expectations.

- Data Profiling: Automatically profile your data to understand its structure and identify potential issues.

- Integration with Various Data Sources: Seamlessly works with data warehouses, data lakes, and other data storage systems.

- Documentation: Generates comprehensive data documentation automatically.
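The following is a minimal plain-Python sketch of what validation and profiling do conceptually. It does not use the Great Expectations library. The helpers `expect_not_null`, `expect_between`, `profile`, and `validate`, along with the `orders` sample data, are hypothetical stand-ins. In Great Expectations itself, checks are declared as Expectations, grouped into suites, and run against a configured data source.

```python
from dataclasses import dataclass
from typing import Any, Callable

# Hypothetical, simplified stand-ins for expectation-style checks.
# This is NOT the Great Expectations API; it only illustrates the idea.
Row = dict[str, Any]


@dataclass
class ExpectationResult:
    expectation: str
    column: str
    success: bool
    unexpected_count: int


def expect_not_null(rows: list[Row], column: str) -> ExpectationResult:
    """Every row must have a non-None value in `column` (missing keys count as null)."""
    bad = sum(1 for r in rows if r.get(column) is None)
    return ExpectationResult("not_null", column, bad == 0, bad)


def expect_between(rows: list[Row], column: str, min_value: float, max_value: float) -> ExpectationResult:
    """Non-null values in `column` must fall within [min_value, max_value]."""
    bad = sum(
        1
        for r in rows
        if r.get(column) is not None and not (min_value <= r[column] <= max_value)
    )
    return ExpectationResult("between", column, bad == 0, bad)


def profile(rows: list[Row]) -> dict[str, dict[str, Any]]:
    """Summarize each column: null count, distinct values, min and max."""
    columns = sorted({c for r in rows for c in r})
    report: dict[str, dict[str, Any]] = {}
    for col in columns:
        values = [r.get(col) for r in rows]
        present = [v for v in values if v is not None]
        report[col] = {
            "nulls": len(values) - len(present),
            "distinct": len(set(present)),
            "min": min(present) if present else None,
            "max": max(present) if present else None,
        }
    return report


def validate(rows: list[Row], suite: list[Callable[[list[Row]], ExpectationResult]]) -> bool:
    """Run every check in the suite, print one line per result, return overall success."""
    results = [check(rows) for check in suite]
    for res in results:
        status = "PASS" if res.success else "FAIL"
        print(f"{status} {res.expectation}({res.column}): {res.unexpected_count} unexpected")
    return all(res.success for res in results)


orders = [
    {"order_id": 1, "amount": 25.0},
    {"order_id": 2, "amount": None},
    {"order_id": 3, "amount": 1200.0},
]
suite = [
    lambda rows: expect_not_null(rows, "order_id"),
    lambda rows: expect_between(rows, "amount", 0, 1000),
]
ok = validate(orders, suite)  # the 1200.0 amount fails the range check
print(profile(orders)["amount"])
```

Here the range check fails because of the 1200.0 row. The null `amount` is skipped by the range check and shows up in the profile instead. Keeping each check small and composable is what makes suites of checks easy to reuse across pipelines.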

Use Cases

- ETL Pipeline Validation: Ensure data transformations are correct and free of quality issues during the ETL process.

- Data Ingestion Monitoring: Validate incoming data from external sources to maintain consistency and reliability.

- Data Quality Reports: Generate detailed reports on data quality for stakeholders and compliance purposes.

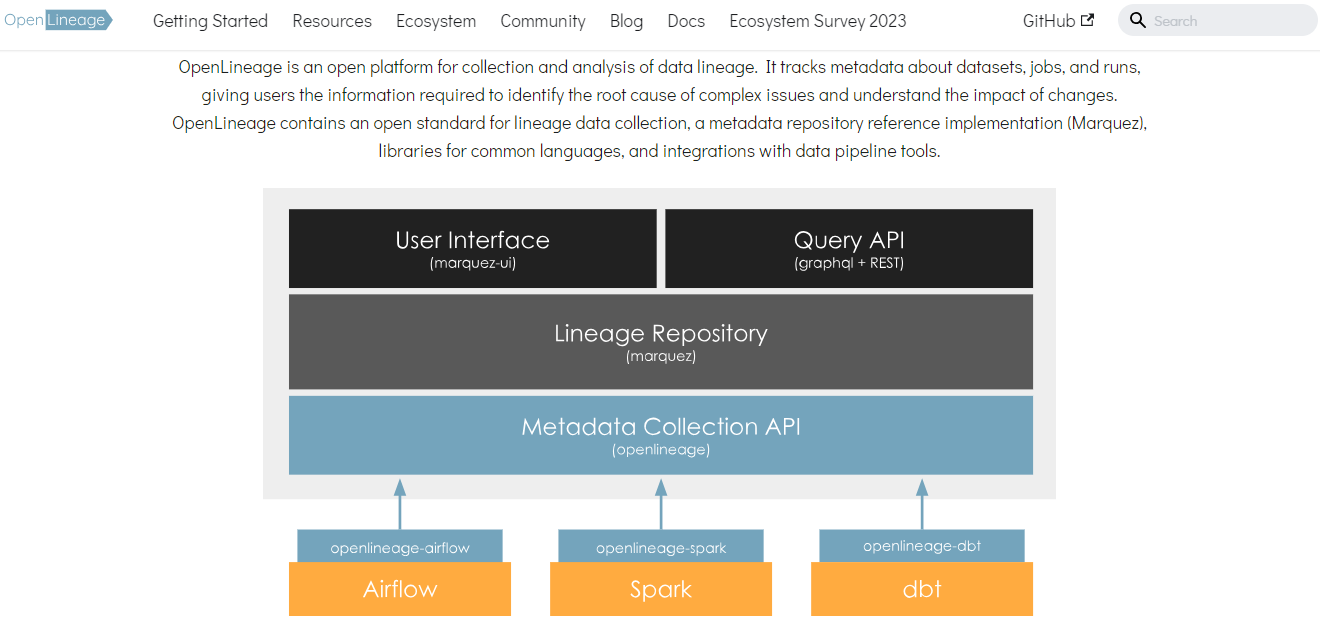

To learn more about Great Expectations, visit their official documentation or explore their GitHub repository.

OpenLineage

OpenLineage is an open standard and framework for collecting data lineage. It defines a common format for the lineage events that pipelines emit, helping data teams understand how data flows through their systems and keeping that flow transparent and traceable.

Core Features

- Data Lineage Tracking: Automatically track the movement and transformation of data across various systems.

- Metadata Collection: Collect and store metadata to provide detailed insights into data flow and dependencies.

- Integration with Popular Tools: Seamlessly integrates with data processing tools like Apache Airflow, dbt, and Apache Spark.

- Visualization: Explore collected lineage as graphs in compatible backends such as Marquez, the project's reference implementation.
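OpenLineage integrations emit run events. Each event is a JSON document naming a job, a run, and the input and output datasets that run touched. The sketch below builds a START/COMPLETE pair by hand with only the standard library, to show roughly what such an event contains. The field names follow our reading of the OpenLineage spec. The producer URI, namespaces, job name, and dataset names are hypothetical, and `<version>` in the schema URL is a placeholder for the spec version you target. In practice, an integration (for Airflow, dbt, or Spark) or an official OpenLineage client emits these events and sends them to a lineage backend.

```python
import json
import uuid
from datetime import datetime, timezone

# Field names follow our reading of the OpenLineage RunEvent spec; check the
# current spec before relying on them. Producer, namespaces, job and dataset
# names are hypothetical placeholders.
PRODUCER = "https://example.com/hypothetical-etl"
SCHEMA_URL = "https://openlineage.io/spec/<version>/OpenLineage.json#/definitions/RunEvent"


def run_event(event_type, run_id, job_name, inputs, outputs, job_namespace="hypothetical-namespace"):
    """Build one lineage event; inputs/outputs are lists of (namespace, name) tuples."""
    return {
        "eventType": event_type,  # e.g. "START" or "COMPLETE"
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "run": {"runId": run_id},  # the same runId links all events of one run
        "job": {"namespace": job_namespace, "name": job_name},
        "inputs": [{"namespace": ns, "name": name} for ns, name in inputs],
        "outputs": [{"namespace": ns, "name": name} for ns, name in outputs],
        "producer": PRODUCER,
        "schemaURL": SCHEMA_URL,
    }


run_id = str(uuid.uuid4())
sources = [("postgres://db.example.com:5432", "public.orders")]
targets = [("s3://example-warehouse", "analytics/orders_daily")]

start = run_event("START", run_id, "daily_orders_etl", sources, targets)
complete = run_event("COMPLETE", run_id, "daily_orders_etl", sources, targets)
print(json.dumps(complete, indent=2))
```

Because every event carries explicit input and output datasets, a backend can stitch events from many jobs into one lineage graph without knowing anything about the tools that produced them.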

Use Cases

- Compliance and Auditing: Maintain detailed records of data transformations and movements for regulatory compliance.

- Data Debugging: Quickly identify the source of data issues by tracing data flow and transformations.

- Impact Analysis: Assess the impact of changes in data pipelines on downstream processes and systems.
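Debugging and impact analysis both come down to walking the lineage graph. Walking upstream finds where a bad value may have come from, and walking downstream shows what a change will affect. The sketch below shows that traversal as a breadth-first search over a hypothetical, hard-coded edge map. In practice, the edges would be derived from the input and output datasets recorded in collected lineage events.

```python
from collections import deque

# Hypothetical lineage edges: dataset -> datasets derived from it.
EDGES = {
    "raw.orders": ["staging.orders"],
    "raw.customers": ["staging.customers"],
    "staging.orders": ["marts.revenue", "marts.order_facts"],
    "staging.customers": ["marts.order_facts"],
    "marts.order_facts": ["dashboards.sales"],
}


def downstream(edges, start):
    """Breadth-first search: every node reachable from `start` (impact analysis)."""
    seen = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in edges.get(node, []):
            if child not in seen:  # the visited set also makes cycles safe
                seen.add(child)
                queue.append(child)
    return seen


def upstream(edges, target):
    """Reverse the edges and reuse downstream() to list root-cause candidates."""
    reversed_edges = {}
    for src, dsts in edges.items():
        for dst in dsts:
            reversed_edges.setdefault(dst, []).append(src)
    return downstream(reversed_edges, target)


print(sorted(downstream(EDGES, "staging.orders")))  # what a change here affects
print(sorted(upstream(EDGES, "dashboards.sales")))  # where a bad number could come from
```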

To learn more about OpenLineage, visit their official documentation or explore their GitHub repository.

Apache Atlas

Apache Atlas is an open-source data governance and metadata management tool designed to help organizations manage their data assets effectively. It provides a framework for data classification, data lineage, and centralized policy enforcement.

Core Features

- Data Governance: Classify and categorize data assets for better governance and compliance.

- Data Lineage: Track the origin and movement of data through various processes and transformations.

- Metadata Management: Centralized repository for managing metadata across different data systems.

- Policy Enforcement: Use classifications to drive data access and masking policies, which are enforced through Atlas's integration with Apache Ranger.
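In Atlas, classifications such as a PII tag can propagate along lineage to derived entities. Tag-based access policies are then typically enforced through Apache Ranger rather than by Atlas alone. The plain-Python sketch below models that interplay in a simplified way. It is not the Atlas or Ranger API, and the entity names, the `PII` tag, the roles, and the policy table are hypothetical.

```python
# Simplified, hypothetical model of classification propagation plus a
# tag-based access check. This is NOT the Apache Atlas or Apache Ranger API.

# Lineage edges: entity -> entities derived from it (hypothetical names).
LINEAGE = {
    "hive.raw_customers": ["hive.customers_clean"],
    "hive.customers_clean": ["hive.customer_report"],
    "hive.products": ["hive.customer_report"],
}

# Classifications attached directly to entities.
DIRECT_TAGS = {"hive.raw_customers": {"PII"}, "hive.products": set()}

# Hypothetical tag-based policy: roles allowed to read assets carrying a tag.
POLICIES = {"PII": {"data_steward", "privacy_officer"}}


def effective_tags(lineage, direct_tags):
    """Propagate tags downstream until nothing changes (a simple fixpoint)."""
    tags = {entity: set(t) for entity, t in direct_tags.items()}
    changed = True
    while changed:
        changed = False
        for src, dsts in lineage.items():
            for dst in dsts:
                current = tags.setdefault(dst, set())
                inherited = tags.get(src, set()) - current
                if inherited:
                    current |= inherited  # mutates the set stored in `tags`
                    changed = True
    return tags


def can_read(role, entity, tags, policies):
    """Allow reads only if the role is permitted for every restricted tag on the entity."""
    return all(role in policies[t] for t in tags.get(entity, set()) if t in policies)


tags = effective_tags(LINEAGE, DIRECT_TAGS)
print(sorted(tags["hive.customer_report"]))  # PII inherited through lineage
print(can_read("analyst", "hive.customer_report", tags, POLICIES))
print(can_read("data_steward", "hive.customer_report", tags, POLICIES))
```

The point of propagation is that nobody has to remember to tag `hive.customer_report` by hand. It inherits the restriction from its PII source, so the access check covers derived data automatically.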

Use Cases

- Regulatory Compliance: Ensure adherence to regulatory requirements by maintaining detailed records of data lineage and usage.

- Data Discovery: Enable users to discover and understand the data assets available within the organization.

- Data Quality Monitoring: Monitor data quality by tracking changes and transformations through the data lifecycle.

- Collaboration: Facilitate collaboration between data teams by providing a shared understanding of data assets and their relationships.

To learn more about Apache Atlas, visit their official documentation or explore their GitHub repository.

OpenMetadata

OpenMetadata is an open-source data discovery and metadata management platform designed to streamline data governance, collaboration, and insights across data assets. It integrates with various data sources and provides a comprehensive view of an organization's data landscape.

Core Features

- Metadata Management: Centralized management of metadata across different data systems.

- Data Discovery: Powerful search and discovery capabilities to help users find relevant data assets quickly.

- Data Lineage: Visualize the flow of data across the organization to understand data transformations and dependencies.

- Collaboration: Built-in tools for data stewards, analysts, and engineers to collaborate on data projects and governance.
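At its simplest, metadata search means indexing asset names, descriptions, and tags, then ranking assets by how well they match a query. The sketch below shows that idea with a tiny inverted index over hypothetical catalog entries. It does not model OpenMetadata's actual search service or API, which is considerably richer. The asset names and descriptions are made up for illustration.

```python
import re
from collections import defaultdict

# Hypothetical catalog: asset name -> description (with tags folded in).
ASSETS = {
    "sales.orders": "Order header table, one row per customer order. tags: finance",
    "sales.order_items": "Line items for each order. tags: finance",
    "crm.customers": "Customer master data with contact details. tags: pii",
}


def tokenize(text):
    """Lowercase and split on anything that is not a letter or digit."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(assets):
    """Map every token to the set of assets whose name or description contains it."""
    index = defaultdict(set)
    for name, description in assets.items():
        for token in tokenize(name) + tokenize(description):
            index[token].add(name)
    return index


def search(index, query):
    """Rank assets by the number of distinct query terms they match (ties by name)."""
    scores = defaultdict(int)
    for term in set(tokenize(query)):
        for name in index.get(term, ()):
            scores[name] += 1
    return sorted(scores, key=lambda name: (-scores[name], name))


index = build_index(ASSETS)
print(search(index, "customer order"))
print(search(index, "pii"))
```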

Use Cases

- Data Governance: Implement robust data governance practices by managing metadata and tracking data lineage.

- Data Quality Monitoring: Ensure data quality by monitoring data lineage and understanding data transformations.

- Compliance: Maintain compliance with regulatory requirements by having a clear view of data usage and lineage.

- Operational Efficiency: Improve operational efficiency by enabling data teams to find and utilize data assets effectively.

To learn more about OpenMetadata, visit their official documentation or explore their GitHub repository.

Soda

Soda is a data quality monitoring platform whose core library, Soda Core, is open source. It helps data teams detect, diagnose, and resolve data issues quickly so that data stays trustworthy.

Core Features

- Data Quality Checks: Automated checks to validate data against predefined rules and thresholds.

- Anomaly Detection: Identify unusual patterns and trends in data that may indicate quality issues (this capability relies on Soda's commercial cloud service rather than Soda Core alone).

- Data Monitoring: Run scans on a schedule or inside data pipelines to detect data quality issues and alert when checks fail.

- Integrations: Connects with various data sources, including databases, data warehouses, and data lakes.
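Soda expresses checks declaratively in SodaCL, using metric-and-threshold lines such as `row_count > 0` or `missing_count(email) = 0`. The sketch below shows what evaluating such checks involves: it computes the metrics over in-memory rows in plain Python and compares each one to its threshold. It is not Soda's API. The check tuples, the `run_scan` helper, the sample `customers` data, and the exact metric definitions (for example, how duplicates are counted) are assumptions for illustration.

```python
import operator

# Hypothetical mini check runner: NOT Soda's API. Metric definitions are our own.
OPS = {">": operator.gt, ">=": operator.ge, "<": operator.lt, "<=": operator.le, "=": operator.eq}


def row_count(rows, _column=None):
    return len(rows)


def missing_count(rows, column):
    """Rows where `column` is None (only None is treated as missing here)."""
    return sum(1 for r in rows if r.get(column) is None)


def duplicate_count(rows, column):
    """Extra occurrences of non-null values that already appeared in an earlier row."""
    values = [r.get(column) for r in rows if r.get(column) is not None]
    return len(values) - len(set(values))


# (metric name, metric function, column, comparison, threshold)
CHECKS = [
    ("row_count", row_count, None, ">", 0),
    ("missing_count", missing_count, "email", "=", 0),
    ("duplicate_count", duplicate_count, "customer_id", "=", 0),
]


def run_scan(rows, checks):
    """Evaluate each check; return (check text, measured value, passed) tuples."""
    results = []
    for name, metric, column, op, threshold in checks:
        value = metric(rows, column)
        label = f"{name}({column})" if column else name
        results.append((f"{label} {op} {threshold}", value, OPS[op](value, threshold)))
    return results


customers = [
    {"customer_id": 1, "email": "a@example.com"},
    {"customer_id": 2, "email": None},
    {"customer_id": 2, "email": "b@example.com"},
]
for check, value, passed in run_scan(customers, CHECKS):
    print(f"{'PASS' if passed else 'FAIL'}  {check}  (measured {value})")
```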

Use Cases

- Data Quality Assurance: Ensure data accuracy and consistency by setting up automated quality checks.

- Proactive Issue Resolution: Detect and resolve data issues before they impact downstream processes.

- Data Reliability: Maintain high data reliability standards to support business decision-making.

- Compliance and Auditing: Simplify compliance with data governance policies through continuous monitoring and anomaly detection.

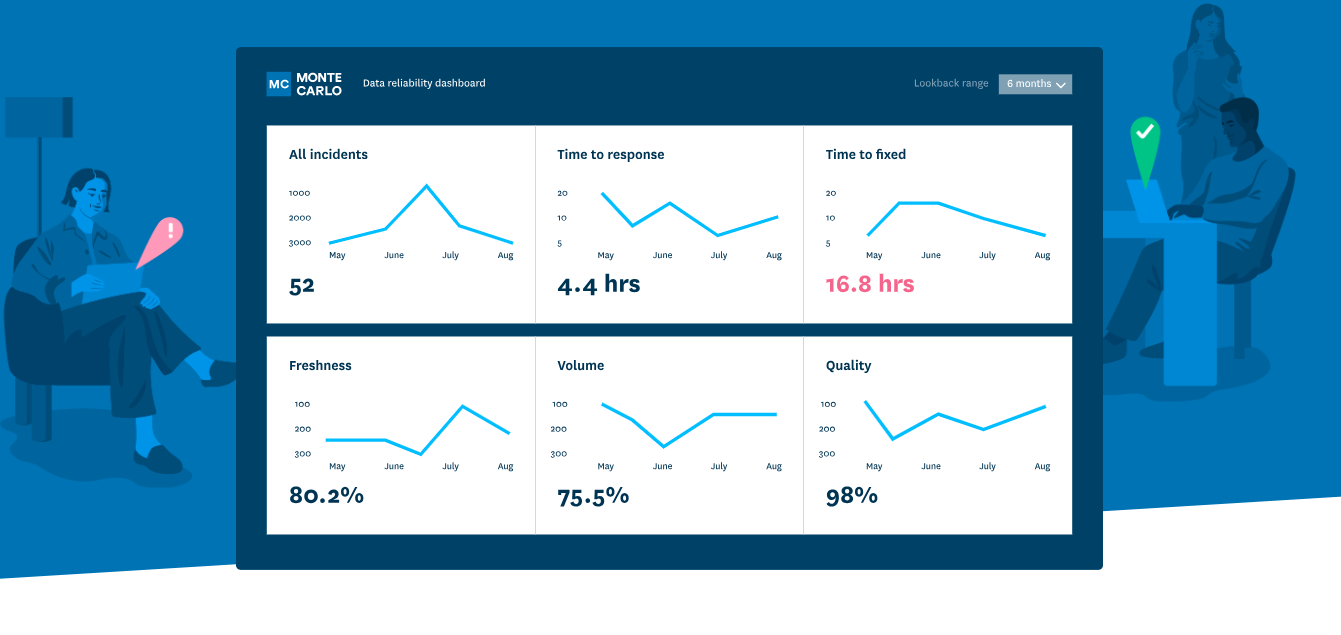

To learn more about Soda, visit their official documentation or explore their GitHub repository.

Monte Carlo

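Monte Carlo is a commercial data observability platform designed to help organizations achieve data reliability. Unlike the other tools in this list, it is proprietary software rather than open source, so it is included here as a point of comparison. It uses automated monitoring and machine learning to detect data issues across the data pipeline and help teams resolve them.

Core Features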

- Automated Data Monitoring: Continuously monitors data for anomalies and changes.

- End-to-End Data Lineage: Visualizes data flow from source to destination to understand the impact of data issues.

- Anomaly Detection: Uses machine learning to identify anomalies in data quality, volume, and schema.

- Incident Management: Provides tools to track, manage, and resolve data incidents efficiently.

- Integrations: Supports integration with various data warehouses, data lakes, and ETL tools.
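Monte Carlo's detection models are proprietary, so the sketch below does not reproduce them. It uses a deliberately simple statistical stand-in, a z-score on a table's daily row counts, to show what a volume anomaly check does. It also includes a schema diff for the kind of schema change mentioned above. The history values, the threshold of 3 standard deviations, and the schema dictionaries are hypothetical.

```python
import math
import statistics


def volume_anomaly(history, latest, threshold=3.0):
    """Flag `latest` if it lies more than `threshold` sample standard deviations from the history mean."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)  # needs at least two data points
    if stdev == 0:
        # Perfectly flat history: any deviation at all counts as anomalous.
        z = 0.0 if latest == mean else math.inf
    else:
        z = (latest - mean) / stdev
    return abs(z) > threshold, z


def schema_changes(previous, current):
    """Compare two {column: type} mappings and report added, removed and retyped columns."""
    return {
        "added": sorted(current.keys() - previous.keys()),
        "removed": sorted(previous.keys() - current.keys()),
        "type_changed": sorted(
            col for col in previous.keys() & current.keys() if previous[col] != current[col]
        ),
    }


daily_rows = [1000, 1020, 980, 1010, 990, 1005, 995]  # hypothetical history
print(volume_anomaly(daily_rows, 400))   # a sudden drop
print(volume_anomaly(daily_rows, 1012))  # within normal variation

old_schema = {"id": "INT", "email": "VARCHAR", "created_at": "TIMESTAMP"}
new_schema = {"id": "BIGINT", "email": "VARCHAR", "signup_source": "VARCHAR"}
print(schema_changes(old_schema, new_schema))
```

A fixed z-score threshold ignores seasonality such as weekday and weekend cycles. That limitation is one reason production tools learn expected ranges from historical patterns instead.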

Use Cases

- Data Quality Management: Ensure data integrity and consistency throughout the data pipeline.

- Root Cause Analysis: Quickly identify and diagnose the root causes of data issues.

- Data Reliability: Maintain trust in data by proactively monitoring and addressing data anomalies.

- Operational Efficiency: Streamline incident management and resolution to minimize downtime and impact on business operations.

To learn more about Monte Carlo, visit their official website or explore their product documentation.

Conclusion

Open source tools for data observability offer a powerful, cost-effective way to enhance your monitoring and analysis capabilities. By leveraging these tools, you can achieve comprehensive insights into your systems, ensuring optimal performance and reliability.

Choosing the right tools depends on your specific needs, including the complexity of your infrastructure, the types of data you need to monitor, and your long-term goals.

As you consider enhancing your data observability practices, explore the features and capabilities of these tools to find the best fit for your organizational needs. With the right tools and strategies in place, you can achieve greater data reliability and operational efficiency.

For more information and to start your journey towards improved data observability, visit the official websites and product documentation of these tools.

Author:

The OpenObserve Team comprises dedicated professionals committed to revolutionizing system observability through their innovative platform, OpenObserve. The team focuses on streamlining data observation and system monitoring, offering high-performance, cost-effective solutions for diverse use cases.