DataDog vs OpenObserve Part 9: Cost - Datadog Alternative in 2026

There's a lazy assumption in enterprise software: "If a tool costs 90% less, it must be 90% worse."

I get it. In the old world of heavy indexing and massive operational overhead, price usually correlated with capability. If something was cheap, it was probably just a raw log tailer with no brains.

But we wanted to challenge that assumption.

We ran a side-by-side comparison using the OpenTelemetry Demo (16 microservices, Kafka, PostgreSQL, OpenAI and more). We sent the exact same logs, metrics, and traces with the exact same volume to DataDog and OpenObserve simultaneously.

The results weren't just about the bill. They highlighted a fundamental difference in architecture.

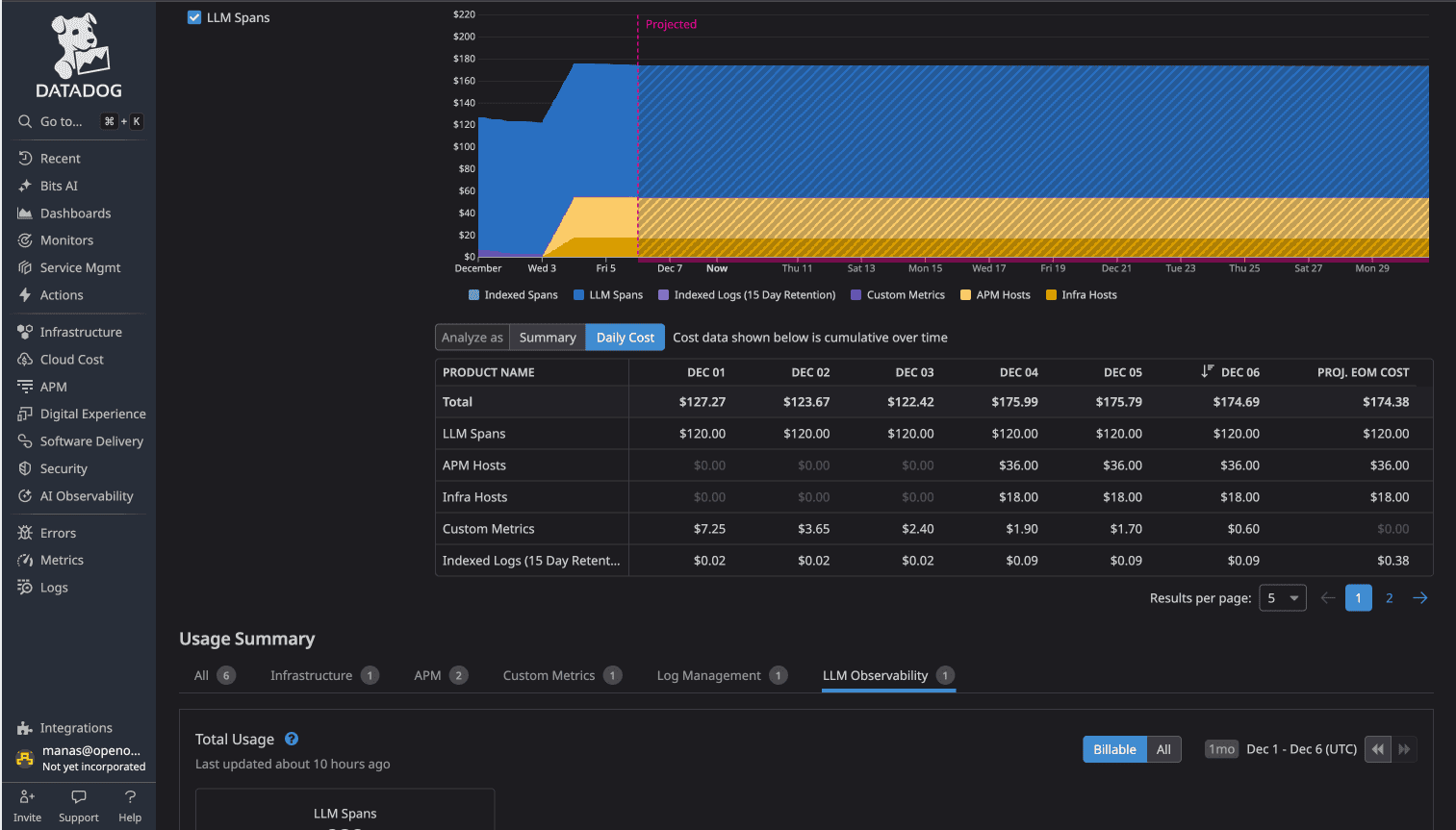

For the exact same dataset:

DataDog: ~$174/day (APM hosts + infrastructure hosts + LLM observability + retention tiers)

OpenObserve: ~$3.00/day (no per-host or hidden charges; longer data retention with S3 storage)

That isn't a discount. That's what happens when you build a highly efficient system, decouple compute from storage, and stop charging a "per-host" tax in a containerized world.

We aren't cheaper because we do less. We're cheaper because the architecture is efficient. On top of that, we're able to offer better functionality.

This is Part 9 in a series comparing DataDog and OpenObserve for observability (security use cases excluded):

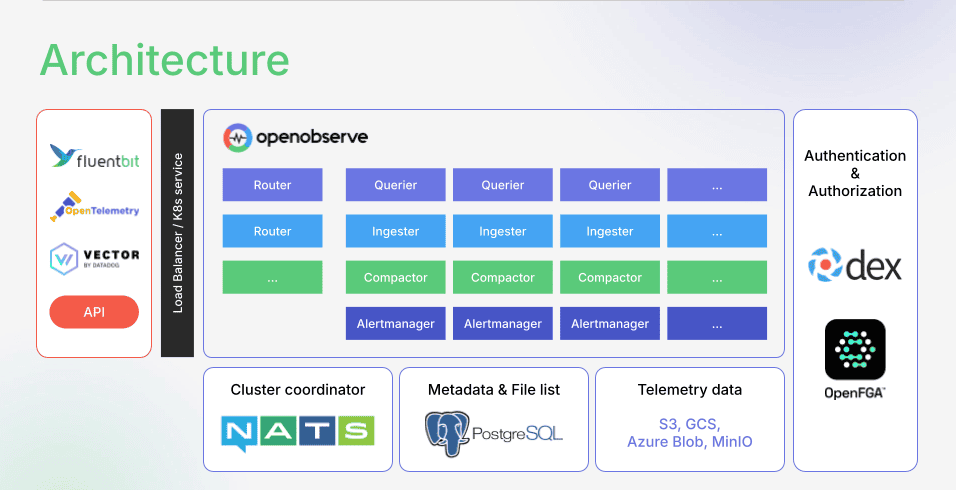

We created a realistic observability test using the OpenTelemetry Demo application—a 16-service e-commerce system called "Astronomy Shop." To make it production-realistic, we extended it with:

All services were auto-instrumented with OpenTelemetry and exported through a single OTel Collector with dual exporters, sending identical data simultaneously to both platforms. Our dataset (published at github.com/openobserve/opentelemetry-demo-dataset):

Telemetry Data:

This setup mirrors production observability: OpenTelemetry instrumentation, centralized collection, vendor-neutral data format.

The fundamental problem isn't that DataDog is expensive. It's that DataDog costs are unpredictable.

DataDog's multi-dimensional pricing (APM hosts, infrastructure hosts, custom metrics, indexed spans, LLM observability, log retention tiers) creates forecasting uncertainty. As our test showed, costs accumulate across several of these dimensions at once: custom metrics you didn't explicitly create, premium features that activate automatically, and cardinality that multiplies charges.

Teams using DataDog often limit instrumentation to control costs: fewer metrics, selective tagging, conservative sampling, careful index design. Observability decisions become financial calculations.

The question shifts from "what should we monitor?" to "what can we afford to monitor?"

OpenObserve's pricing model removes this friction. Forecast by data volume, not resource counting. Instrument comprehensively without cost anxiety. Design for operational needs, not billing optimization.
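
Forecasting by volume reduces to a single multiplication. Below is a minimal sketch, assuming the flat $0.50/GB rate quoted in this post and a 30-day month. `forecast_cost` is a hypothetical helper, not an OpenObserve API. Extended-retention fees are not modeled, and the 10 GB/day input is an illustrative value rather than a figure from our test.

```python
from dataclasses import dataclass

RATE_PER_GB = 0.50  # flat rate quoted in this post (USD per GB ingested)


@dataclass
class Forecast:
    daily_usd: float
    monthly_usd: float


def forecast_cost(gb_per_day: float, rate_per_gb: float = RATE_PER_GB, days: int = 30) -> Forecast:
    """Volume-based forecast: the only input that moves cost is GB ingested per day."""
    if gb_per_day < 0:
        raise ValueError("gb_per_day must be non-negative")
    daily = gb_per_day * rate_per_gb
    return Forecast(daily_usd=daily, monthly_usd=daily * days)


# Hypothetical volume: 10 GB/day of logs, metrics, and traces combined.
f = forecast_cost(10)
print(f"${f.daily_usd:.2f}/day, ${f.monthly_usd:.2f}/month")
```

Host counts, metric counts, and span counts do not appear anywhere in the function. That omission is the point: a budget only needs an estimate of data volume.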

Consider what per-metric pricing does in practice: highly sophisticated, well-funded engineering teams dedicate headcount to deleting observability data because the unit economics of their monitoring tool don't work.

Engineers "hunt down high-cardinality custom metrics" not because those metrics lack value, but because keeping them becomes financially prohibitive. Teams spend time removing instrumentation instead of adding it.

This isn't a problem with observability itself. It's an architectural dead end.

Some platforms use S3 for storage to lower their infrastructure costs. But they still charge you for "indexing" to make that data fast, and they charge a premium for every custom metric you track. They use modern storage to lower their costs, but keep the "Index Tax" to protect their margins.

OpenObserve is written in Rust and stores data in object storage such as S3. We use inverted indexes too, but ours are lightweight and efficient, which lets us handle high cardinality without the penalty. We don't charge extra for custom metrics.
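
An inverted index maps each token to the records that contain it. A search then looks up a few entries instead of scanning every log line. The sketch below is a generic, in-memory illustration of that idea. It is not OpenObserve's implementation, which this post does not describe in detail. The lowercase whitespace tokenizer is a simplifying assumption.

```python
from collections import defaultdict


def tokenize(line: str) -> list[str]:
    # Simplifying assumption: lowercase and split on whitespace.
    return line.lower().split()


def build_index(lines: list[str]) -> dict[str, set[int]]:
    """Map each token to the set of line numbers (its postings) that contain it."""
    index: dict[str, set[int]] = defaultdict(set)
    for line_no, line in enumerate(lines):
        for token in tokenize(line):
            index[token].add(line_no)
    return dict(index)


def search(index: dict[str, set[int]], *terms: str) -> set[int]:
    """AND query: intersect the postings of every term instead of scanning all lines."""
    postings = [index.get(term.lower(), set()) for term in terms]
    if not postings:
        return set()
    return set.intersection(*postings)


logs = [
    "ERROR checkout payment timeout",
    "INFO checkout order placed",
    "ERROR recommendation openai timeout",
]
idx = build_index(logs)
print(sorted(search(idx, "error", "timeout")))  # [0, 2]
```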

You shouldn't have to delete valuable data to control costs.

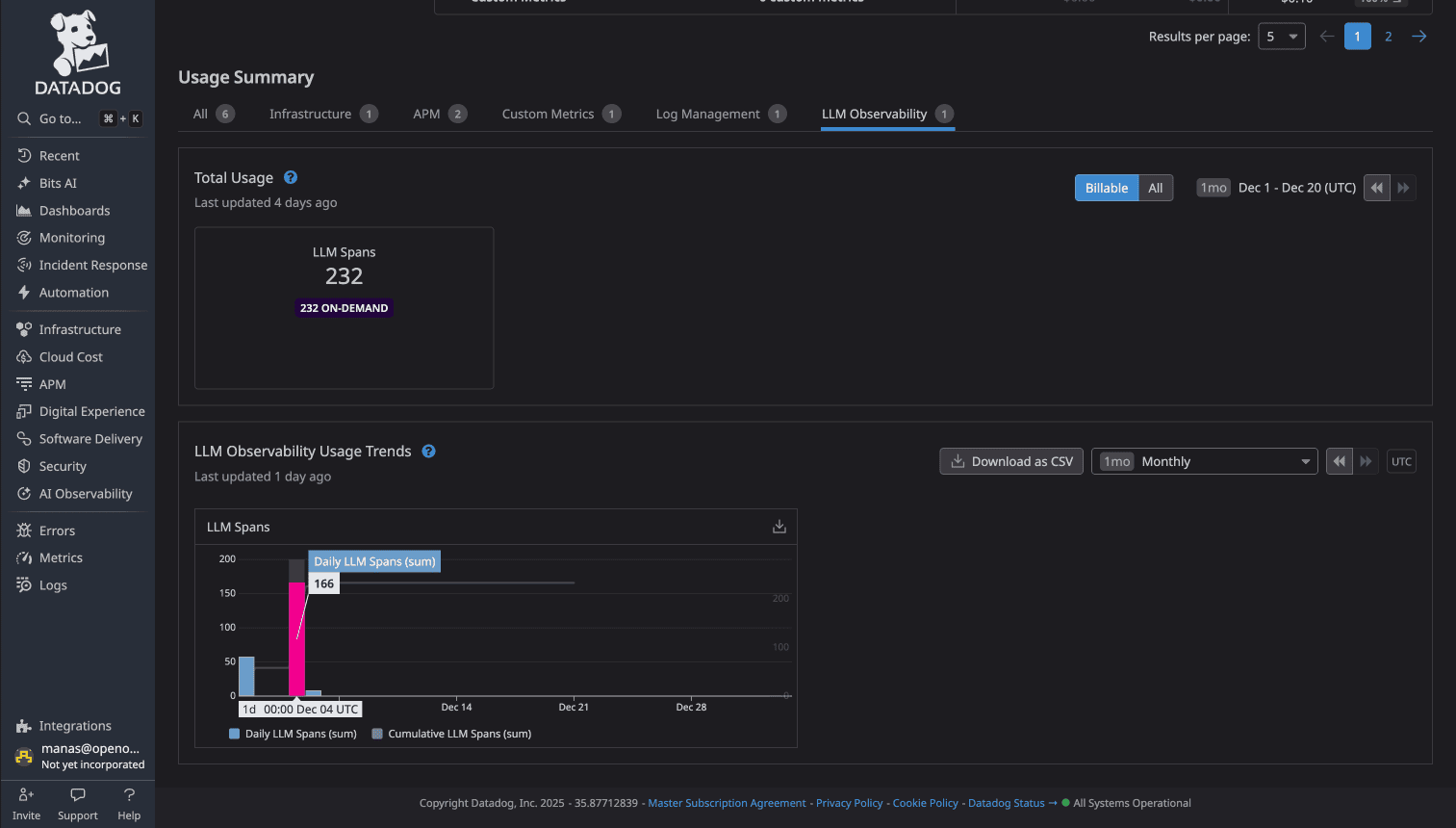

Our test environment included a recommendation service making API calls to OpenAI for product suggestions. DataDog detected LLM span attributes and automatically activated LLM Observability—a premium feature charging $120 per day.

This activation happens without user confirmation. Add an AI feature to your application, and your observability bill automatically increases by $3,600/month ($120/day × 30 days).

Source: See Part 3: Traces/APM for detailed LLM observability comparison

In OpenObserve, LLM traces are standard traces. No special classification. No premium pricing. The flat $0.50/GB rate applies to all observability data. Adding AI features doesn't add premium charges.

Cost difference: $120/day DataDog vs $0 premium in OpenObserve

DataDog APM charges $36 per month per host (Pro tier). Our 16-service environment with supporting infrastructure counted as multiple APM hosts: frontend, backend services, and containerized workloads.

Source: DataDog APM Pricing

The per-host model creates a problem in containerized environments: adding a new microservice or scaling horizontally multiplies costs, even if that service generates minimal telemetry.

OpenObserve charges by data volume, not host count. Deploy 10 services or 1,000 services—cost scales with the telemetry generated, not the number of containers.
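
A small model makes the contrast concrete. This sketch rests on several assumptions. The $36/host/month APM rate is the Pro-tier figure cited above, and the $0.50/GB rate is OpenObserve's flat rate from this post. The new service runs on its own host and emits 0.05 GB/day; both are hypothetical values chosen to represent a quiet service. Infrastructure-host fees and other charges are ignored.

```python
APM_RATE_PER_HOST_MONTH = 36.0  # DataDog APM Pro tier, as cited above (USD)
RATE_PER_GB = 0.50              # OpenObserve flat rate, as cited in this post (USD)
DAYS_PER_MONTH = 30             # simplifying assumption


def per_host_monthly(hosts: int) -> float:
    """Per-host model: each host adds a full charge, however little it emits."""
    return hosts * APM_RATE_PER_HOST_MONTH


def per_volume_monthly(gb_per_day: float) -> float:
    """Volume model: cost follows telemetry ingested, not host count."""
    return gb_per_day * RATE_PER_GB * DAYS_PER_MONTH


# Hypothetical: one quiet new microservice on its own host, emitting 0.05 GB/day.
added_hosts = 1
added_gb_per_day = 0.05
print(f"per-host model:   +${per_host_monthly(added_hosts):.2f}/month")
print(f"per-volume model: +${per_volume_monthly(added_gb_per_day):.2f}/month")
```

Under the per-host model, the increment is fixed for every host added. Under the volume model, a quiet service adds only a correspondingly small amount.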

Cost difference: $36/day DataDog APM vs included in $3/day OpenObserve

DataDog Infrastructure Monitoring charges $18 per month per host (Pro tier). Container environments count hosts based on high-water mark: the maximum number of hosts running simultaneously during the month.

Source: DataDog Infrastructure Pricing

This creates unpredictable costs during traffic spikes. Auto-scaling events that spin up additional containers briefly can increase monthly bills significantly.
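
A simplified model shows why brief spikes matter under high-water-mark billing. This sketch takes the plain monthly maximum of hourly host counts, as described above. The exact rule a vendor applies, for example whether outlier hours are trimmed, should be checked against its billing documentation. The $18/host/month rate is the Pro-tier figure cited above. The hourly host counts are hypothetical.

```python
INFRA_RATE_PER_HOST_MONTH = 18.0  # DataDog Infrastructure Pro tier, as cited above (USD)


def high_water_mark_bill(hourly_host_counts: list[int], rate: float = INFRA_RATE_PER_HOST_MONTH) -> float:
    """Bill the month on the peak number of simultaneously running hosts (simplified)."""
    if not hourly_host_counts:
        return 0.0
    return max(hourly_host_counts) * rate


def average_hosts(hourly_host_counts: list[int]) -> float:
    """Mean host count across the month, for comparison with the billed peak."""
    return sum(hourly_host_counts) / len(hourly_host_counts)


# Hypothetical 720-hour month: 20 hosts steady, plus a 6-hour auto-scaling spike to 60 hosts.
hours = [20] * 714 + [60] * 6
print(f"average hosts: {average_hosts(hours):.1f}")
print(f"billed on peak: ${high_water_mark_bill(hours):.2f}/month")
```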

OpenObserve's per-GB pricing means host count doesn't drive the bill. Kubernetes auto-scales during Black Friday? You pay for the telemetry the extra pods send, not for the peak number of hosts that ran.

DataDog includes 100 custom metrics per host (Pro tier), then charges for additional metrics. The problem: modern applications generate thousands of custom metrics through tags, labels, and high-cardinality dimensions.

Source: See Part 2: Metrics for custom metric pricing impact

Teams restrict tagging to avoid custom metric proliferation. This limits alert coverage: you can't alert on metrics you didn't create due to cost concerns.
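
Tag combinations are where the count grows fastest. Datadog counts a custom metric as a unique combination of metric name and tag values, so one metric name with a few tags can become hundreds of billable series. The sketch below counts the possible combinations and compares them with the 100-per-host allotment cited above. It rests on several assumptions. The tag names and values are hypothetical. The allotment is treated as pooled across hosts. Per-metric overage prices are not modeled.

```python
import math

INCLUDED_PER_HOST = 100  # Pro-tier allotment cited in this post


def max_series(tags: dict[str, list[str]]) -> int:
    """Upper bound on distinct name+tag-value combinations for one metric name.

    Only combinations that are actually emitted get counted, so this is a ceiling.
    """
    return math.prod(len(values) for values in tags.values())


def overage(total_series: int, hosts: int) -> int:
    """Series beyond the allotment, assumed here to be pooled across hosts."""
    return max(0, total_series - INCLUDED_PER_HOST * hosts)


# Hypothetical tags on a single request-latency metric.
tags = {
    "endpoint": [f"/api/route{i}" for i in range(20)],
    "status": ["200", "400", "404", "500", "503"],
    "region": ["us-east", "us-west", "eu", "ap"],
}
series = max_series(tags)
print(f"{series} possible series; {overage(series, hosts=2)} beyond the allotment for 2 hosts")
```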

OpenObserve provides unlimited metrics with no per-metric charges. Tag freely. Create composite metrics. Monitor every dimension without counting.

Cost impact: Custom metric charges force alert limitations vs comprehensive monitoring

DataDog charges based on retention duration: 15 days, 30 days, 90 days, 1 year. Longer retention multiplies costs.

Source: See Part 1: Logs for retention tier comparison

This forces teams to choose: either delete data early or pay substantially more for historical analysis. Compliance requirements (SOC 2, HIPAA) often mandate 1-year retention, making long-term storage prohibitively expensive.

DataDog charges for indexed trace spans. Teams configure span sampling to control costs: sample 1% of traces, index only errors, or use intelligent sampling that misses outliers.

Source: See Part 3: Traces/APM for span indexing costs

Sampling creates visibility gaps. That one trace that would explain the production issue? Probably not sampled.
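
Probabilistic head sampling commonly decides per trace from the trace ID, so whether a specific trace survives comes down to probability. The sketch below is a ratio sampler in the spirit of OpenTelemetry's `TraceIdRatioBased` sampler: it keeps a trace when the low 64 bits of its ID fall under a threshold. It is written from scratch for illustration and does not claim to mirror DataDog's retention logic. It also computes the chance that at least one of k independent traces of a rare failure is kept.

```python
import random

TRACE_ID_LIMIT = (1 << 64) - 1  # compare on the low 64 bits of a 128-bit trace ID


def keep_trace(trace_id: int, ratio: float) -> bool:
    """Deterministic ratio sampling: the same trace ID always gets the same decision."""
    bound = round(ratio * (TRACE_ID_LIMIT + 1))
    return (trace_id & TRACE_ID_LIMIT) < bound


def p_at_least_one_kept(ratio: float, occurrences: int) -> float:
    """Probability that at least one of k independent traces of an issue is sampled."""
    return 1.0 - (1.0 - ratio) ** occurrences


rng = random.Random(42)
ids = [rng.getrandbits(128) for _ in range(100_000)]
kept = sum(keep_trace(t, 0.01) for t in ids)
print(f"kept {kept} of {len(ids)} traces at 1% sampling")
print(f"rare error seen 5 times: {p_at_least_one_kept(0.01, 5):.1%} chance any trace is kept")
```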

OpenObserve indexes all trace spans by default with no per-span charges. Full trace retention enables comprehensive root cause analysis without sampling gaps.

Cost impact: Span index charges force aggressive sampling vs full trace retention

When you combine all dimensions, the cost difference becomes clear:

| Cost Component | DataDog | OpenObserve |
|---|---|---|
| LLM Observability | $120/day (automatic premium) | $0 (included in flat rate) |
| APM Hosts | $36/day (16-service environment) | $0 (included in flat rate) |
| Infrastructure Hosts | $18/day (container high-water mark) | $0 (included in flat rate) |
| Custom Metric Overages | Variable (beyond 100/host) | $0 (unlimited metrics) |
| Retention Tier Premiums | Variable (time-based multiplier) | $0 (same rate regardless) |
| Indexed Span Charges | Variable (per-span fees) | $0 (all spans indexed) |
| Fixed Daily Cost | $174/day | $3/day |

This isn't theoretical pricing. These are the actual costs from sending identical production-like telemetry to both platforms simultaneously for 30 days.
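
The fixed DataDog components in the cost-component table add up to the $174/day total. The same arithmetic gives monthly figures and the savings percentage. This small check uses only numbers reported in this post. Variable overages are excluded because the table does not quantify them, and a 30-day month is assumed.

```python
# Fixed daily DataDog components reported in the cost-component table (USD/day).
datadog_daily = {
    "llm_observability": 120.0,
    "apm_hosts": 36.0,
    "infrastructure_hosts": 18.0,
}
OPENOBSERVE_DAILY = 3.0  # reported total for the same data (USD/day)
DAYS_PER_MONTH = 30      # assumption for monthly projections

dd_total = sum(datadog_daily.values())
savings_pct = (dd_total - OPENOBSERVE_DAILY) / dd_total * 100

print(f"DataDog:     ${dd_total:.0f}/day, ${dd_total * DAYS_PER_MONTH:,.0f}/month")
print(f"OpenObserve: ${OPENOBSERVE_DAILY:.0f}/day, ${OPENOBSERVE_DAILY * DAYS_PER_MONTH:,.0f}/month")
print(f"Savings:     {savings_pct:.1f}%")
print(f"LLM premium alone: ${datadog_daily['llm_observability'] * DAYS_PER_MONTH:,.0f}/month")
```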

| Cost Dimension | DataDog | OpenObserve |
|---|---|---|
| Pricing Model | Per-host + per-metric + per-span + time-based retention + premium tiers | Flat $0.50/GB for all observability data |
| LLM Observability | $120/day automatic premium when LLM spans are detected | Standard pricing (no premium) |
| APM Hosts | $36/day (16-service environment) | Included in $3/day flat rate |
| Infrastructure Hosts | $18/day (container high-water mark) | Included in $3/day flat rate |
| Custom Metrics | 100 per host included, then per-metric charges | Unlimited metrics, no per-metric fees |
| Retention Tiers | Time-based pricing (15/30/90/365 days) multiplies costs | Flat $0.10 / 30 days for extended retention |
| Indexed Spans | Per-span charges force sampling | All spans indexed, no per-span fees |
| Cost Predictability | Multi-dimensional pricing creates forecasting uncertainty | Forecast by data volume |
| Total Test Cost | $174/day | $3/day |
| Cost Savings | Baseline | ~98% lower in this test |

Evereve, a fashion retail company with over 130 stores nationwide, faced the exact cost unpredictability problem our test revealed.

The Challenge:

Aaron Bell, Principal Systems Engineer & Cloud Architect at Evereve, initially implemented DataDog for monitoring their e-commerce platform and Celerant ERP database. But DataDog's per-metric charges for custom metrics forced the team to constantly question not whether they could monitor something, but whether they should—a decision invariably influenced by cost rather than operational necessity.

To work around these constraints, Bell stood up a parallel monitoring infrastructure using Prometheus with Grafana, plus Loki for log aggregation. This provided flexibility for custom metrics without the associated costs, but created operational overhead from maintaining multiple disparate systems. Meanwhile, the e-commerce team used New Relic and the subscription service used AppSignal, and these silos of observability data prevented comprehensive system visibility.

The Solution:

After evaluating multiple observability platforms over the course of a year, Evereve selected OpenObserve. Within 15 minutes, Bell had a full web UI and API ingesting data from multiple sources. OpenObserve compressed 3 terabytes of audit log data down to just half a terabyte while keeping search blazing fast.

The platform's flat pricing transformed the fundamental question from "should we monitor this?" to "can we monitor this?"—with the answer invariably being yes.

The Results:

As Bell stated: "I don't worry about cost. I don't worry about performance because we really kicked the tires on this—we took it out on the racetrack and ran it around."

Evereve proved that enterprise-grade observability doesn't require enterprise-grade costs or complexity.

Teams can reduce their total cost of observability by 60-90% moving from DataDog to OpenObserve without limiting visibility. The dimensional pricing that makes DataDog bills unpredictable (custom metrics, hosts, retention, indexing, LLM spans) doesn't exist in OpenObserve's flat-rate model.

You don't have to accept that observability costs millions or that visibility means sampling. With OpenObserve, you can see everything at any scale, without compromise.

OpenObserve's OpenTelemetry-native architecture means you instrument once with industry-standard OTel SDKs, export to OpenObserve, and eliminate cost anxiety. No proprietary agents. No feature-based pricing tiers. No automatic premium charges when you add AI features.

The cost difference isn't about sacrificing visibility. It comes from architectural efficiency: decoupled compute and storage, efficient compression, S3-based long-term retention, and no artificial per-resource charges.

For platform engineers managing observability budgets, these differences matter. No cost anxiety about adding instrumentation. No sampling to control span indexing costs. Transparent pricing that scales with data volume rather than infrastructure size.

Evereve achieved 90% cost savings migrating from DataDog to OpenObserve. They didn't sacrifice visibility but instead eliminated pricing complexity. Full instrumentation, longer retention, and accurate cost forecasting became possible.

Your team can achieve similar results.

Send your DataDog bill to hello@openobserve.ai and our team will show you exactly how much you can save moving to OpenObserve Cloud.

Try OpenObserve Cloud with your real telemetry data—see the cost difference firsthand with a free trial.

Schedule a demo to walk through your specific use case and get a customized cost analysis.

Q: How much does OpenObserve cost compared to DataDog?

A: Real test data from our 16-service OpenTelemetry demo shows OpenObserve costs $3/day vs DataDog's $174/day for identical observability coverage (98% cost savings). OpenObserve charges a flat $0.50/GB for all logs, metrics, and traces, while DataDog's multi-dimensional pricing (per-host charges, custom metrics, LLM premium, retention tiers, indexed spans) creates unpredictable costs.

Q: What hidden costs does DataDog have?

A: DataDog's pricing model includes several dimensions that compound: LLM Observability automatically activates when AI spans are detected ($120/day premium), custom metrics beyond 100 per host incur per-metric charges, retention tiers multiply storage costs, and indexed span fees force aggressive sampling. Teams often discover these costs after deployment when bills exceed forecasts.

Q: Can I see everything without sampling in OpenObserve?

A: Yes. OpenObserve's flat $0.50/GB pricing covers full data ingestion and indexing without per-span or per-metric charges. This eliminates the need for sampling, selective tagging, or limiting instrumentation to control costs. The Evereve case study demonstrates year-over-year retention with unlimited custom metrics at >90% cost savings compared to DataDog.

Q: How quickly can I migrate from DataDog to OpenObserve?

A: OpenObserve is OpenTelemetry-native, so if you're already using OTel instrumentation, migration involves reconfiguring the OTel Collector to export to OpenObserve instead of DataDog. Most teams complete initial setup within hours. Send your DataDog bill to hello@openobserve.ai for a customized migration plan and cost analysis.

Q: Does cheaper mean worse capabilities?

A: No. The cost difference comes from architectural efficiency, not feature limitations. OpenObserve provides SQL and PromQL queries (vs proprietary syntax), unlimited custom metrics and high cardinality (vs per-metric charges), full span indexing (vs sampling), and configurable retention (vs time-based pricing tiers). Real test data shows comparable or better capabilities at 98% lower cost.