Comprehensive Guide to Integrating AWS CloudWatch Logs with Amazon Kinesis Firehose


Managing logs efficiently is crucial for monitoring, diagnosing, and optimizing applications in the cloud. AWS CloudWatch Logs offers a powerful solution for centralized log collection and analysis. By streaming CloudWatch Logs to Kinesis Firehose, you can take this further and get scalable, near-real-time log delivery to other tools for processing and analysis. In this blog, we’ll cover the benefits and walk step by step through setting up the CloudWatch Logs to Firehose pipeline for improved AWS log management.


AWS CloudWatch Logs is the default log destination for most AWS services. This seamless integration makes CloudWatch Logs a go-to tool for centralized log management in AWS environments. CloudWatch Logs is good for basic log search and short-term retention. For better usability and control, you may want to move logs from CloudWatch to more capable services such as OpenObserve, or to S3.

Amazon Kinesis Firehose is a fully managed service designed to deliver real-time streaming data to various destinations, such as data lakes, analytics services, and custom HTTP endpoints. By integrating CloudWatch Logs with Firehose, you can stream logs seamlessly to tools like OpenObserve or other destinations, enabling real-time monitoring, analysis, and storage of log data.

To get started, you need to send logs to CloudWatch. Below is a Python script that pushes sample logs to a CloudWatch log group.

import boto3

import logging

import watchtower

# Replace with your AWS region

region_name = 'us-east-1'

# Create a CloudWatch client if needed

cloudwatch_client = boto3.client('logs', region_name=region_name)

# Replace with your log group name

log_group = 'my-log-group'

# Configure logging

logging.basicConfig(level=logging.INFO)

logger = logging.getLogger(__name__)

# Add CloudWatch as a logging handler

logger.addHandler(

watchtower.CloudWatchLogHandler(

log_group=log_group,

stream_name='my-log-stream', # Replace with your log stream name

use_queues=False # Optional: helps with immediate log transmission

)

)

# Log messages to CloudWatch

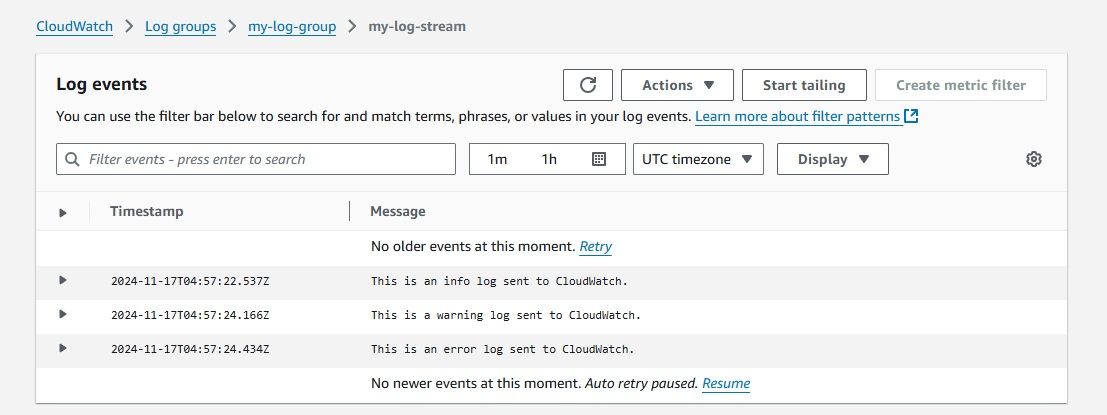

logger.info("This is an info log sent to CloudWatch.")

logger.warning("This is a warning log sent to CloudWatch.")

logger.error("This is an error log sent to CloudWatch.")

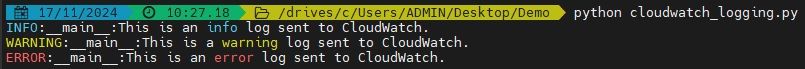

The above script sends Python log messages (INFO, WARNING, ERROR) to AWS CloudWatch by configuring the logging system with a CloudWatch log handler using boto3 and watchtower.

Save the Python script: Save the code to a Python file, e.g., cloudwatch_logging.py.

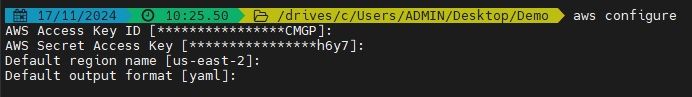

To run this code, make sure you have the required Python libraries installed and AWS credentials configured. Here's how you can do it:

pip install boto3 watchtower

Ensure AWS credentials are set up: Make sure your AWS credentials are configured using the AWS CLI or environment variables (AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY).

Run the script:

python cloudwatch_logging.py

This will execute the script and send the log messages to AWS CloudWatch.
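Before wiring up Firehose, it can help to confirm that the events actually reached the log group. The sketch below reads them back with boto3's `filter_log_events` call. The helper names `default_logs_client` and `fetch_recent_messages` are illustrative, not part of boto3, and the region is assumed to be the one used in the script.

```python
def default_logs_client(region_name="us-east-1"):
    """Create a CloudWatch Logs client (boto3 is imported only when needed)."""
    import boto3
    return boto3.client("logs", region_name=region_name)


def fetch_recent_messages(client, log_group, limit=10):
    """Return up to `limit` messages from the first page of results for a log group."""
    response = client.filter_log_events(logGroupName=log_group, limit=limit)
    return [event["message"] for event in response.get("events", [])]
```

Calling `fetch_recent_messages(default_logs_client(), "my-log-group")` should list the sample messages once CloudWatch has indexed them, which can take a few seconds.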


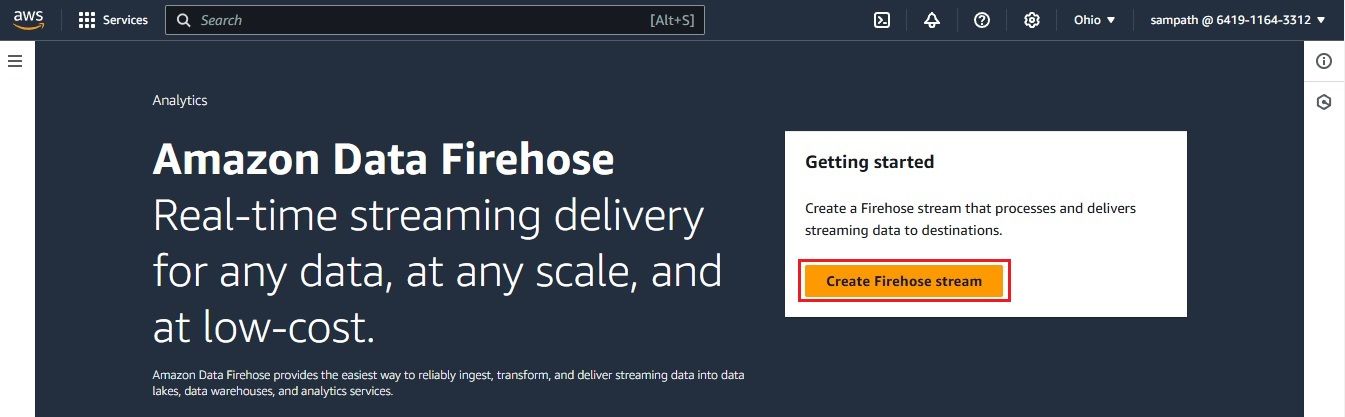

A Kinesis Firehose Delivery Stream is needed to stream real-time data from CloudWatch Logs to destinations like OpenObserve. It ensures reliable data delivery and backup. Here's how to create one:

Go to the Amazon Kinesis Console and select Create delivery stream to begin the setup.

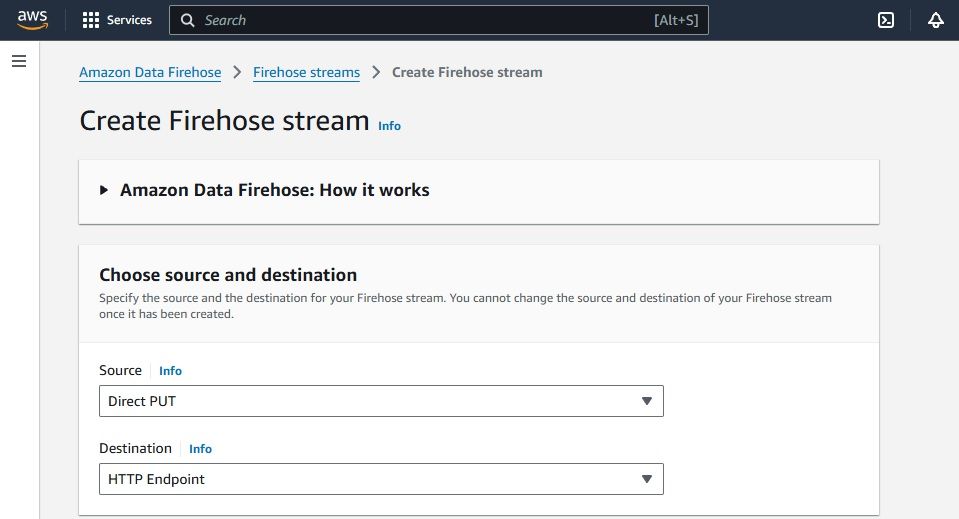

Select Direct Put as the source to receive data directly from CloudWatch Logs and HTTP endpoint as the destination to send data to your OpenObserve endpoint.


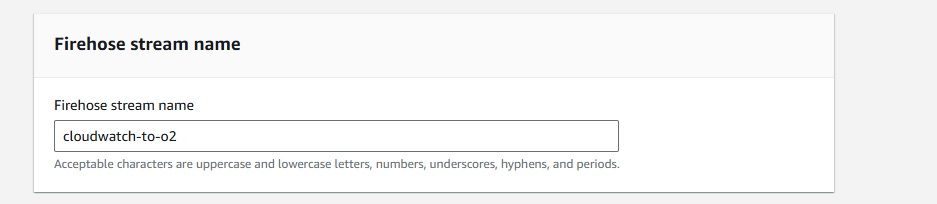

Provide a name for your delivery stream (e.g., cloudwatch-to-o2).

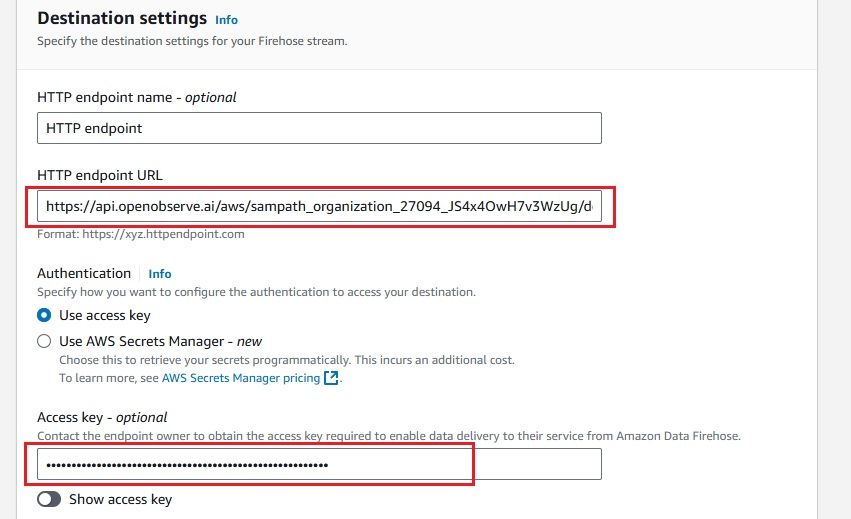

Enter the HTTP Endpoint URL and select Use access key for authentication.

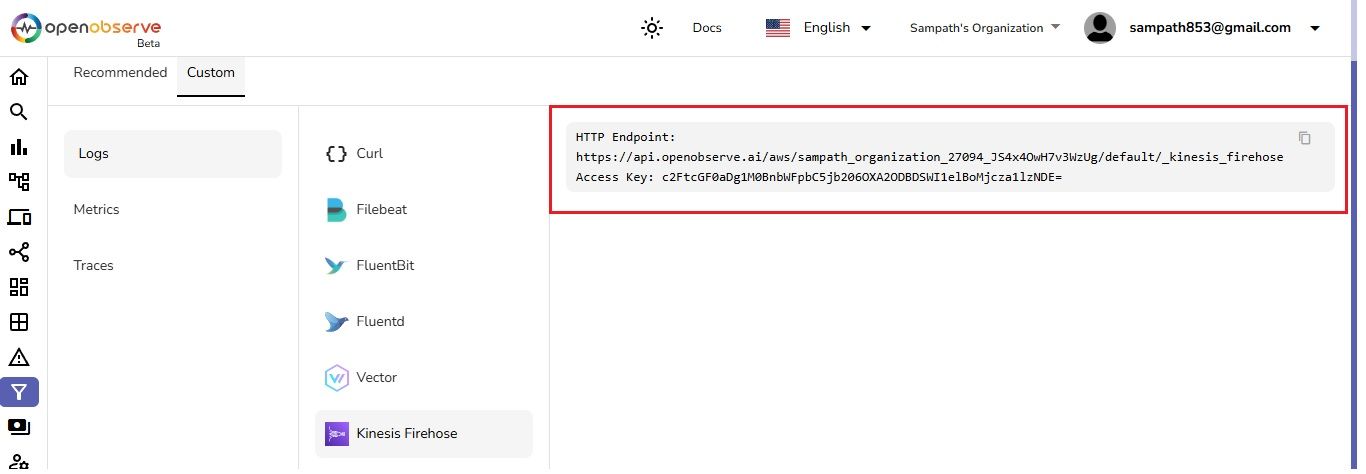

You can find the HTTP Endpoint URL and access key for authentication in OpenObserve under Ingestion > Custom > Logs > Kinesis Firehose.

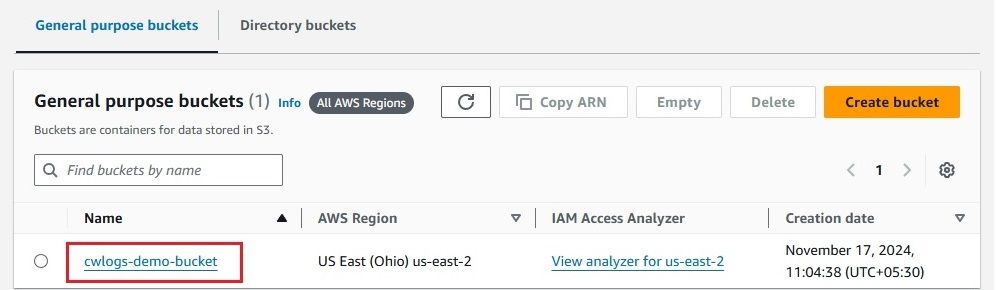

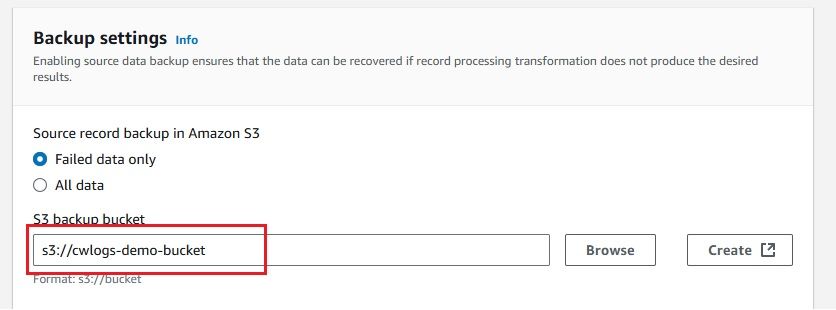

In the Backup settings, choose Source record backup in Amazon S3 for either Failed data only or All data, and select an S3 bucket. You can browse and select an existing bucket or create a new one and select it.

I have created a bucket named cwlogs-demo-bucket for storing backup data.

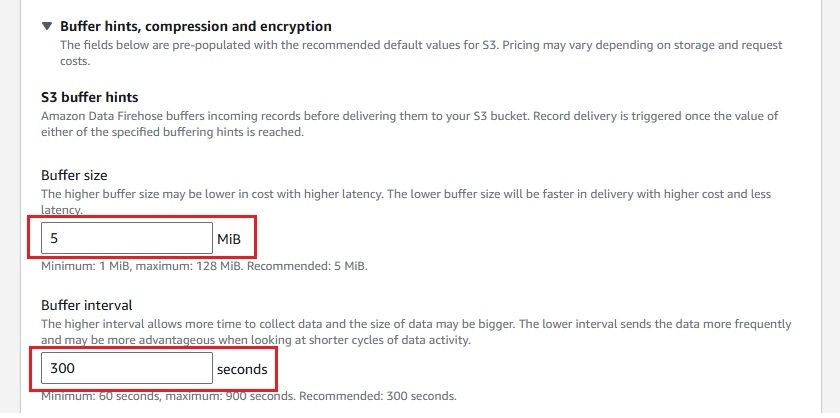

Configure Batching Options: During the Firehose stream creation process, you'll see options related to data batching. Kinesis Firehose buffers incoming data and, with the default settings, delivers a batch every 300 seconds or as soon as 5 MB of data has accumulated, whichever comes first.

This is an important aspect of how Firehose handles data delivery, so make sure to configure it properly based on your use case.
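To get a feel for which of the two limits triggers delivery, the arithmetic can be sketched as below. It assumes a steady ingest rate, the 300-second and 5 MB defaults quoted above, and treats 1 MB as 1024 * 1024 bytes. `first_flush` is a hypothetical helper, not part of any AWS SDK.

```python
def first_flush(bytes_per_second, size_mb=5, interval_seconds=300):
    """Return (trigger, seconds) for the first buffer flush at a steady ingest rate."""
    if bytes_per_second <= 0:
        raise ValueError("bytes_per_second must be positive")
    size_bytes = size_mb * 1024 * 1024
    seconds_to_fill = size_bytes / bytes_per_second
    if seconds_to_fill < interval_seconds:
        return ("size", seconds_to_fill)   # buffer fills before the timer expires
    return ("interval", interval_seconds)  # timer expires first


print(first_flush(1024))         # 1 KiB/s: the time limit is reached first
print(first_flush(1024 * 1024))  # 1 MiB/s: the size limit is reached first
```

For a low-volume log group like the demo one, the interval is what decides how quickly logs show up downstream, so a shorter interval may be worth considering if you want faster feedback.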


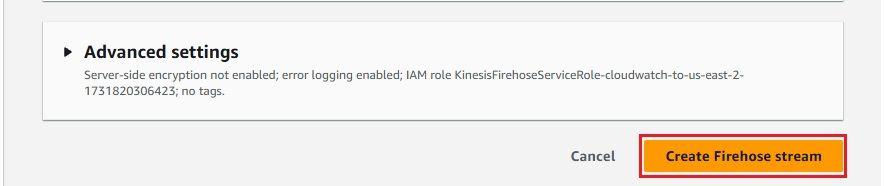

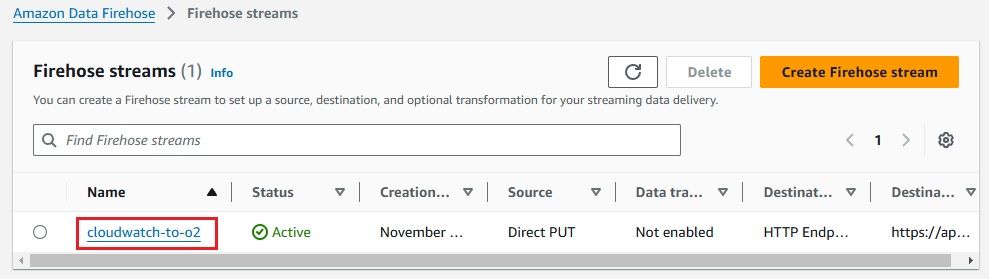

Click Create Firehose stream to finish the setup.

Once completed, you will see a Firehose stream named cloudwatch-to-o2 created.
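The same stream can also be created from code. The following is a minimal sketch using boto3's `create_delivery_stream`, not a drop-in replacement for the console flow. The endpoint URL, access key, account ID, and the `firehose-delivery-role` role are placeholders I made up for illustration: copy the real URL and key from OpenObserve, and use a role that Firehose can assume to write to the backup bucket (the console typically offers to create one for you).

```python
import json


def build_delivery_stream_request(endpoint_url, access_key, role_arn, bucket_arn,
                                  stream_name="cloudwatch-to-o2"):
    """Build keyword arguments for firehose.create_delivery_stream."""
    return {
        "DeliveryStreamName": stream_name,
        "DeliveryStreamType": "DirectPut",  # source: Direct Put
        "HttpEndpointDestinationConfiguration": {
            "EndpointConfiguration": {
                "Url": endpoint_url,
                "Name": "OpenObserve",
                "AccessKey": access_key,
            },
            # Default batching settings discussed above
            "BufferingHints": {"SizeInMBs": 5, "IntervalInSeconds": 300},
            "RoleARN": role_arn,
            "S3BackupMode": "FailedDataOnly",  # or "AllData"
            "S3Configuration": {"RoleARN": role_arn, "BucketARN": bucket_arn},
        },
    }


def create_delivery_stream(request, region_name="us-east-1"):
    """Send the request to AWS (boto3 is imported only when this is called)."""
    import boto3
    firehose = boto3.client("firehose", region_name=region_name)
    return firehose.create_delivery_stream(**request)


request = build_delivery_stream_request(
    endpoint_url="https://example.invalid/firehose",  # placeholder URL
    access_key="REPLACE_ME",                          # placeholder key
    role_arn="arn:aws:iam::123456789012:role/firehose-delivery-role",  # hypothetical
    bucket_arn="arn:aws:s3:::cwlogs-demo-bucket",
)
print(json.dumps(request, indent=2))
```

Printing the request first makes it easy to review before calling `create_delivery_stream(request)`.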

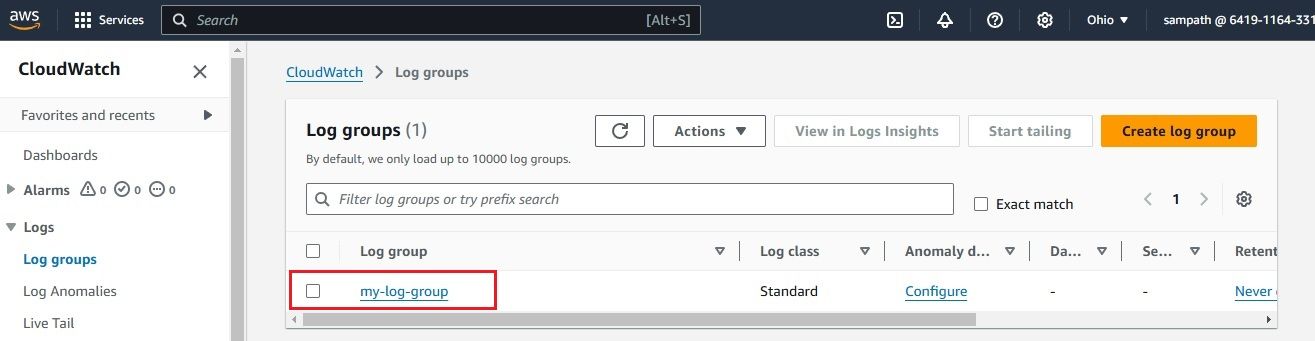

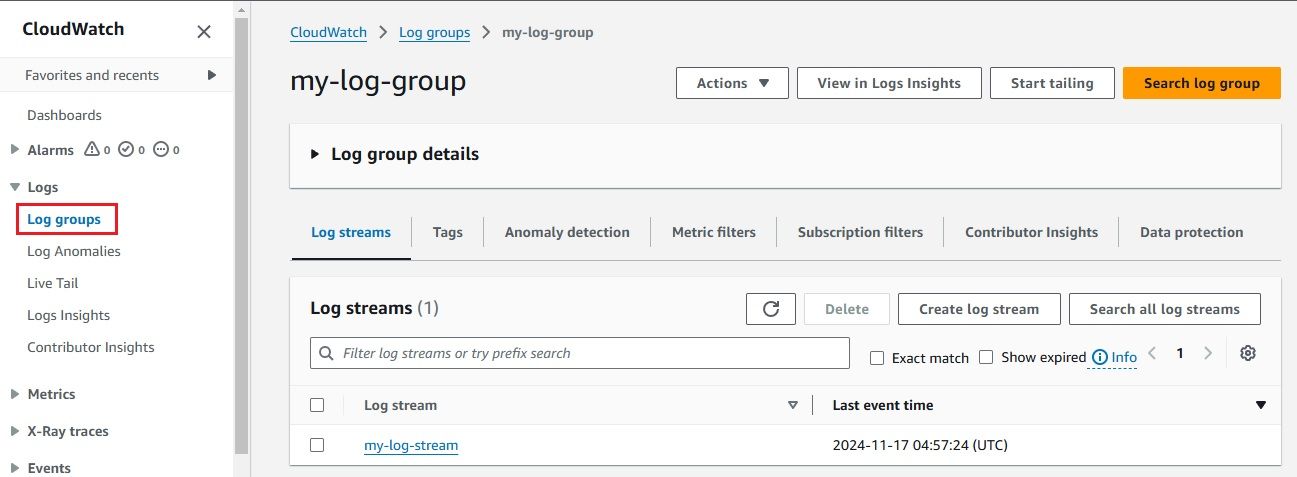

A subscription filter is needed to route logs from CloudWatch to Firehose. Here's how to create one:

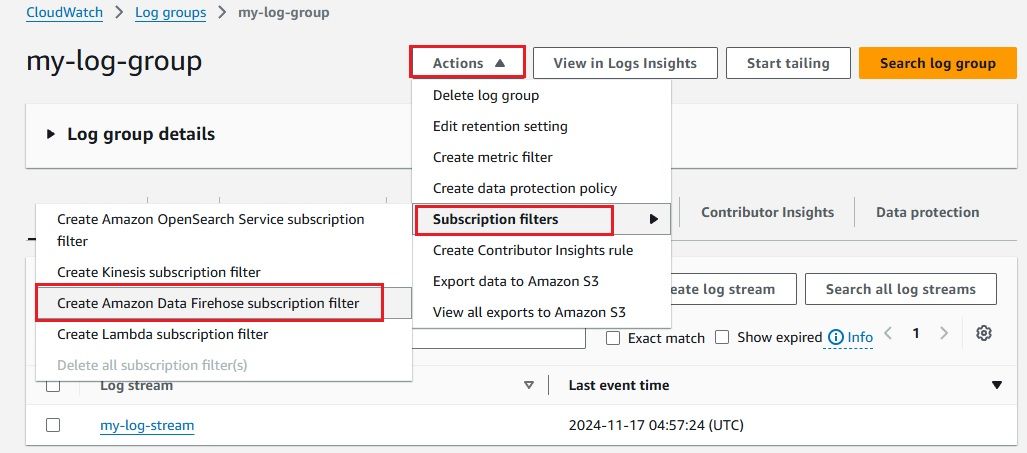

Navigate to CloudWatch in the AWS Management Console and select the log group you want to stream; in our case, it's "my-log-group".

Click on Actions and select Create subscription filter. In the Destination section, choose Create Amazon Data Firehose subscription filter.

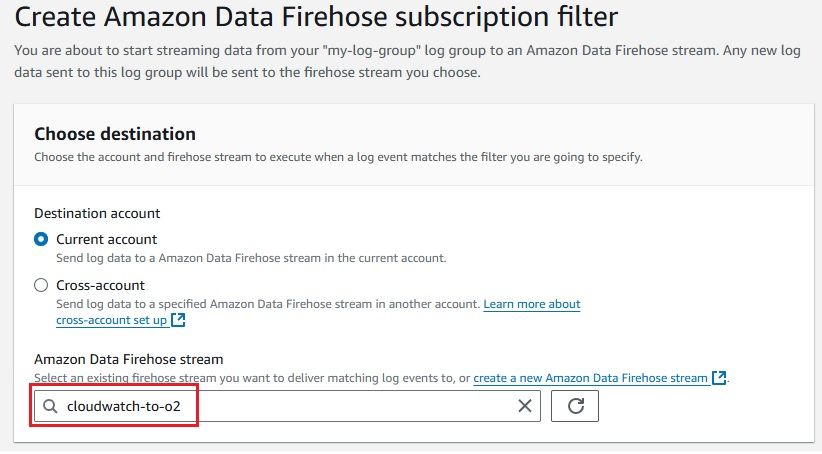

Under Choose destination, select Current account since we have previously created an Amazon Kinesis Data Firehose stream named "cloudwatch-to-o2" in the same account. Then, select the cloudwatch-to-o2 stream.

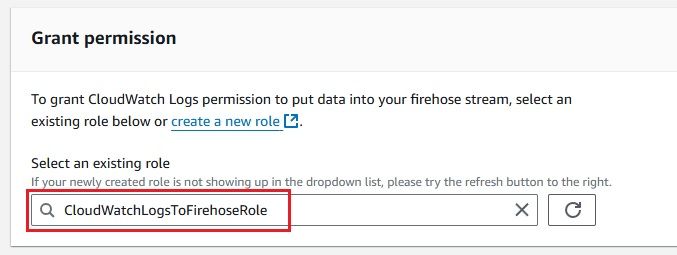

Under Grant permission, we need to choose a role that allows CloudWatch Logs to send data to the Firehose stream we previously set up.

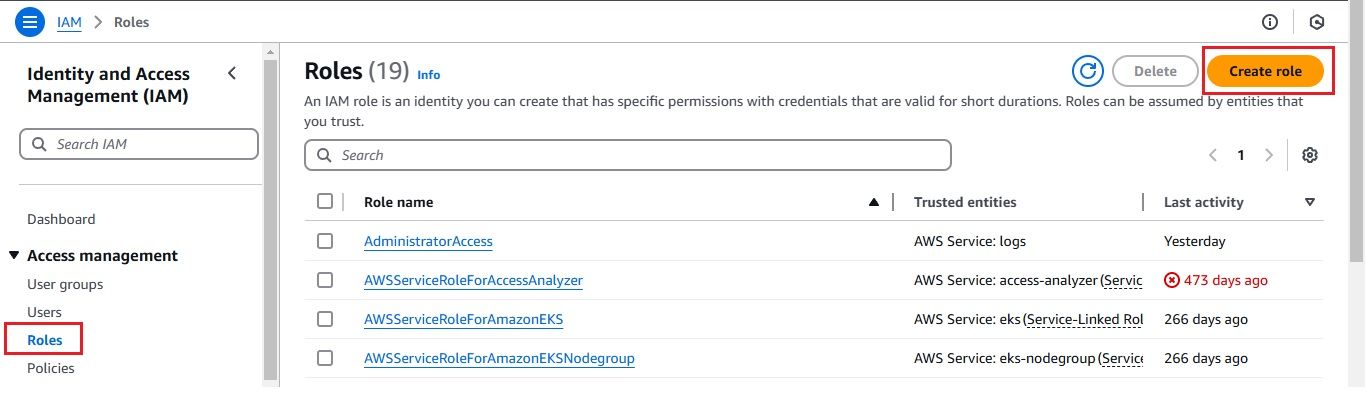

To create CloudwatchLogsToFirehoseRole, go to IAM in the AWS Console, click Roles, and then Create role to set up permissions for CloudWatch Logs to send data to Firehose.

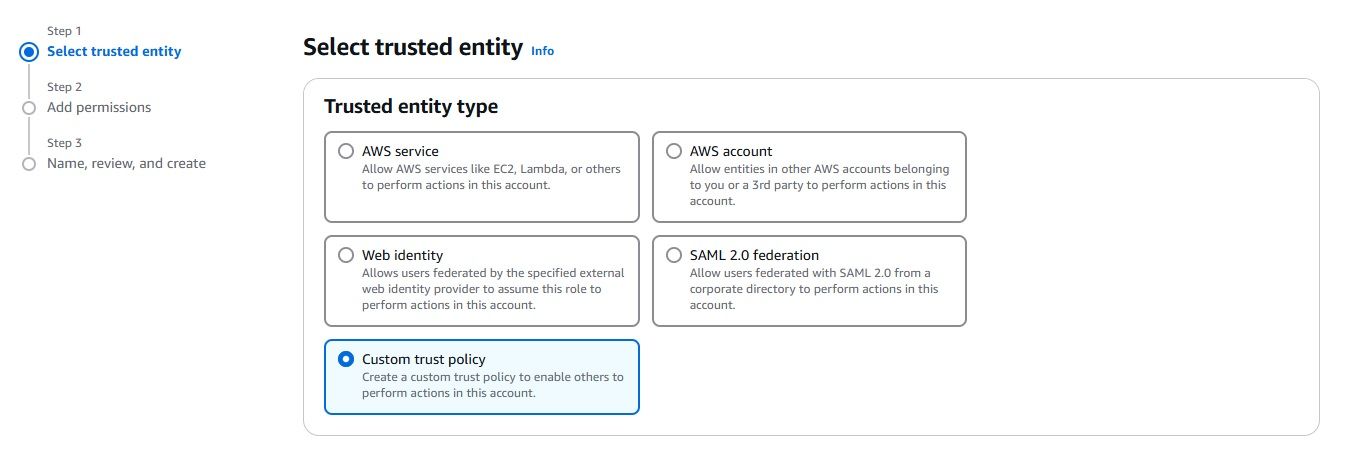

Next, in the Select trusted entity section, choose Custom trust policy.

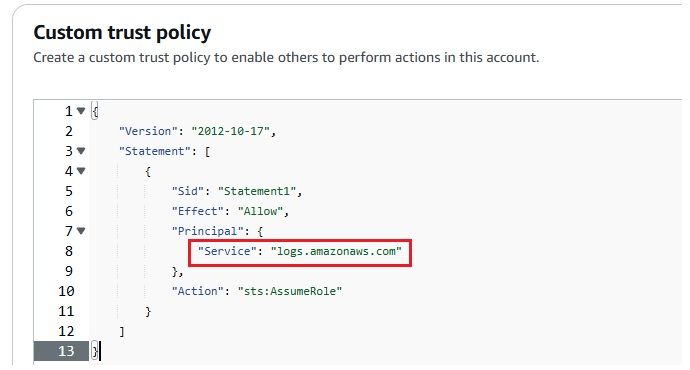

Define the custom trust policy so that CloudWatch Logs can assume the role. To do this, set the Principal to logs.amazonaws.com.

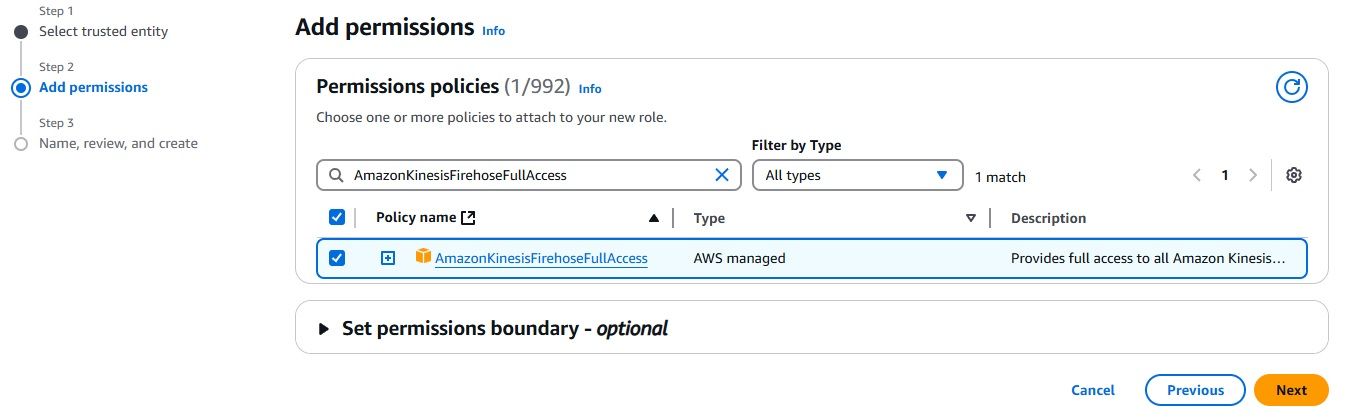
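For reference, a trust policy of this shape can be written out as follows. This is a sketch of the standard form with `logs.amazonaws.com` as the service principal. The `create_logs_role` helper is illustrative and simply wraps boto3's `iam.create_role`.

```python
import json

# Trust policy that lets the CloudWatch Logs service assume the role
TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "logs.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def create_logs_role(role_name="CloudwatchLogsToFirehoseRole"):
    """Create the role with the trust policy above (boto3 imported only when called)."""
    import boto3
    iam = boto3.client("iam")
    return iam.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(TRUST_POLICY),
    )


# JSON you can paste into the Custom trust policy editor
print(json.dumps(TRUST_POLICY, indent=2))
```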

Once you've entered the trust policy, click Next: Permissions to proceed. Now, you'll need to attach the necessary permissions policies that allow CloudWatch Logs to interact with other AWS resources like Kinesis Firehose.

We need to grant the firehose:PutRecord permission, but for simplicity, I am attaching the AmazonKinesisFirehoseFullAccess policy.

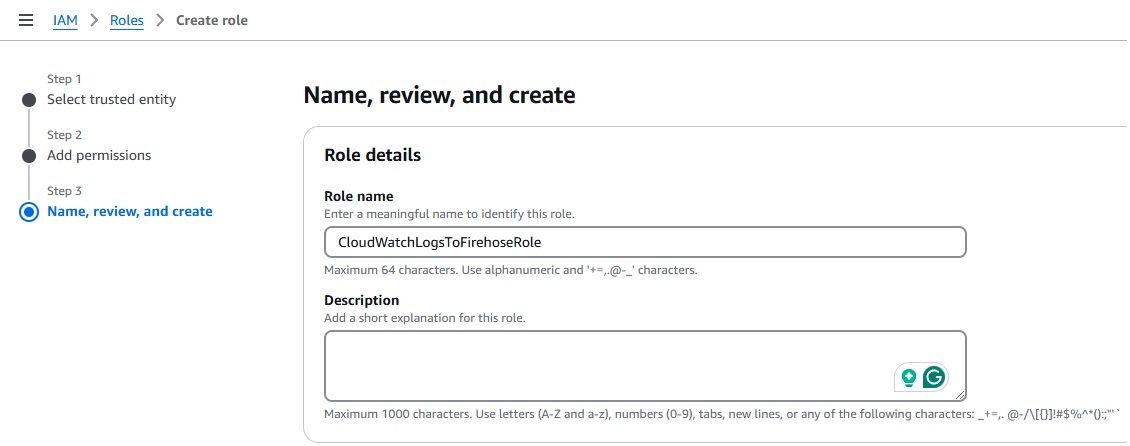
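If you would rather not attach the full-access policy, a narrower inline policy can be sketched like this. It grants only `firehose:PutRecord` on a single stream. The account ID is a placeholder, and the `firehose_put_policy` and `attach_inline_policy` helpers and the policy name are hypothetical.

```python
import json


def firehose_put_policy(region, account_id, stream_name):
    """Build a policy allowing firehose:PutRecord on one delivery stream."""
    stream_arn = (
        f"arn:aws:firehose:{region}:{account_id}:deliverystream/{stream_name}"
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["firehose:PutRecord"],
                "Resource": [stream_arn],
            }
        ],
    }


def attach_inline_policy(policy, role_name="CloudwatchLogsToFirehoseRole",
                         policy_name="put-to-cloudwatch-to-o2"):
    """Attach the policy inline to the role (boto3 imported only when called)."""
    import boto3
    iam = boto3.client("iam")
    return iam.put_role_policy(
        RoleName=role_name,
        PolicyName=policy_name,
        PolicyDocument=json.dumps(policy),
    )


# 123456789012 is a placeholder account ID
policy = firehose_put_policy("us-east-1", "123456789012", "cloudwatch-to-o2")
print(json.dumps(policy, indent=2))
```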

Now, name the role and click Create role to complete the setup.

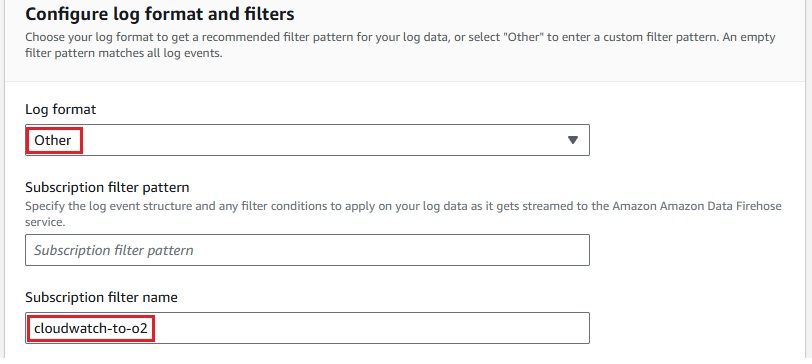

In Configure log format and filters, select Log format: Other since our log is plain text. Name the subscription filter cloudwatch-to-o2.

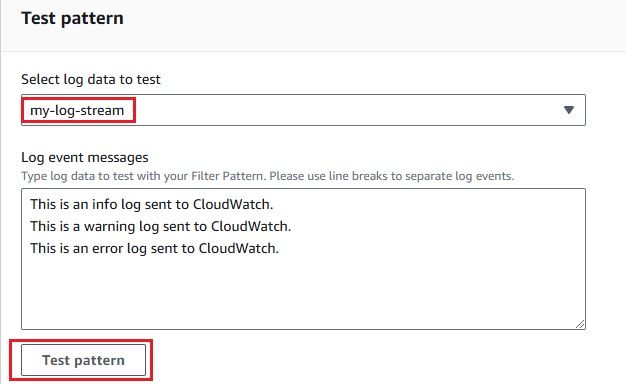

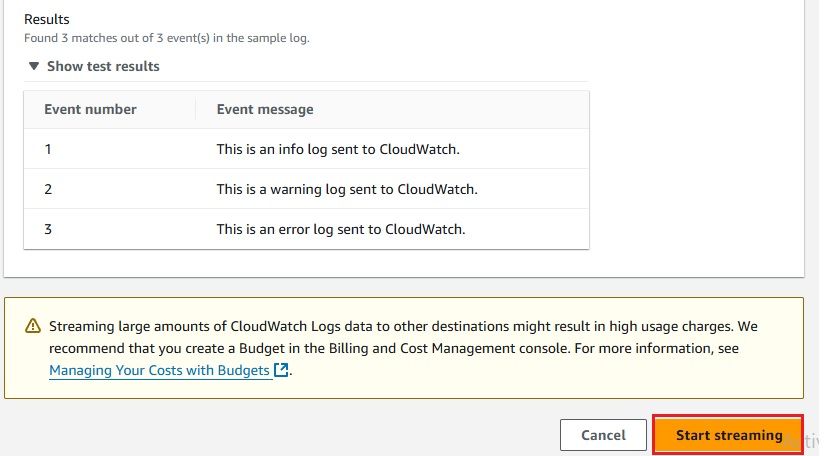

Under Test pattern, you can choose log data and test the pattern to preview the outcome.

Once you're satisfied with the outcome, click Start streaming to begin the process.

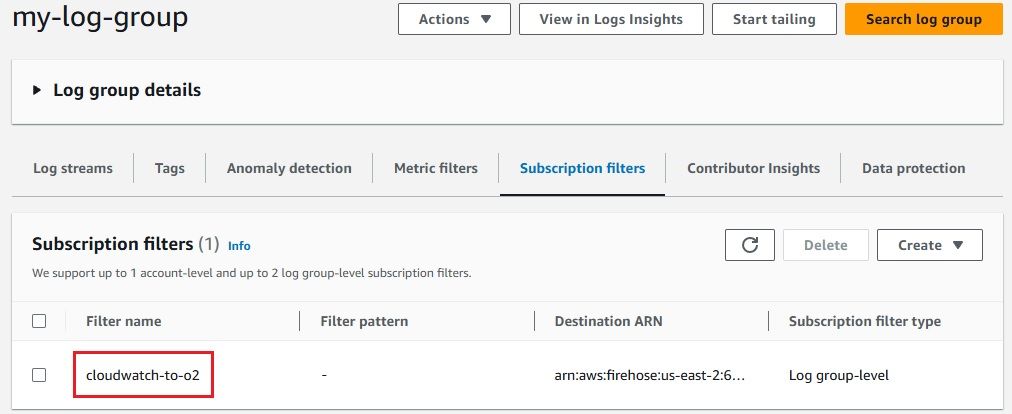

You have now successfully created the subscription filter.
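The console steps for the subscription filter map onto a single boto3 call, `put_subscription_filter`. Here is a minimal sketch. The ARNs use a placeholder account ID, and the helper names are illustrative rather than part of boto3.

```python
def build_subscription_filter_request(log_group, stream_arn, role_arn,
                                      filter_name="cloudwatch-to-o2"):
    """Build keyword arguments for logs.put_subscription_filter."""
    return {
        "logGroupName": log_group,
        "filterName": filter_name,
        "filterPattern": "",           # an empty pattern matches every log event
        "destinationArn": stream_arn,  # the Firehose stream
        "roleArn": role_arn,           # role CloudWatch Logs assumes to write to it
    }


def put_subscription_filter(request, region_name="us-east-1"):
    """Create or update the filter (boto3 is imported only when this is called)."""
    import boto3
    logs = boto3.client("logs", region_name=region_name)
    return logs.put_subscription_filter(**request)


# 123456789012 is a placeholder account ID
request = build_subscription_filter_request(
    log_group="my-log-group",
    stream_arn="arn:aws:firehose:us-east-1:123456789012:deliverystream/cloudwatch-to-o2",
    role_arn="arn:aws:iam::123456789012:role/CloudwatchLogsToFirehoseRole",
)
print(request)
```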

Now that we have set up the integration between AWS CloudWatch Logs and Kinesis Firehose, it’s time to check whether the logs are successfully flowing into OpenObserve. This step is crucial to ensure that your log data is being ingested and processed correctly.
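If nothing shows up, it can help to look at what CloudWatch Logs actually hands to Firehose, for example by downloading an object from the S3 backup bucket. CloudWatch Logs delivers each record as a gzip-compressed JSON envelope containing a `logEvents` list. The sketch below decodes such a payload. The `decode_cwl_records` helper is hypothetical, and the sample is hand-built for illustration rather than captured from a real stream.

```python
import gzip
import json
from typing import List


def decode_cwl_records(blob: bytes) -> List[dict]:
    """Decode one or more gzip-compressed CloudWatch Logs envelopes."""
    text = gzip.decompress(blob).decode("utf-8")
    decoder = json.JSONDecoder()
    records, pos = [], 0
    while pos < len(text):
        if text[pos].isspace():  # skip whitespace between JSON documents
            pos += 1
            continue
        record, pos = decoder.raw_decode(text, pos)
        records.append(record)
    return records


# Hand-built sample envelope using the field names CloudWatch Logs sends
sample = {
    "messageType": "DATA_MESSAGE",
    "logGroup": "my-log-group",
    "logStream": "my-log-stream",
    "subscriptionFilters": ["cloudwatch-to-o2"],
    "logEvents": [
        {"id": "1", "timestamp": 0,
         "message": "This is an info log sent to CloudWatch."},
    ],
}
blob = gzip.compress(json.dumps(sample).encode("utf-8"))

for record in decode_cwl_records(blob):
    for event in record["logEvents"]:
        print(record["logGroup"], event["message"])
```

The loop over `raw_decode` is there because a backup object may hold several envelopes back to back.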

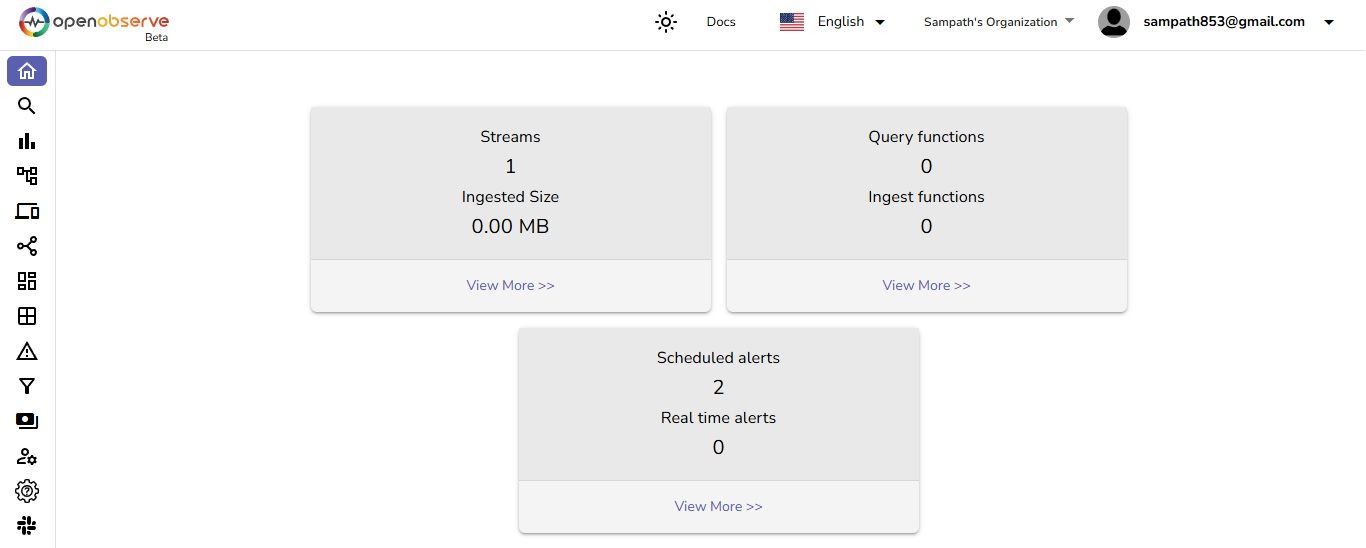

From the OpenObserve home page, we can see that logs have been ingested into one stream.

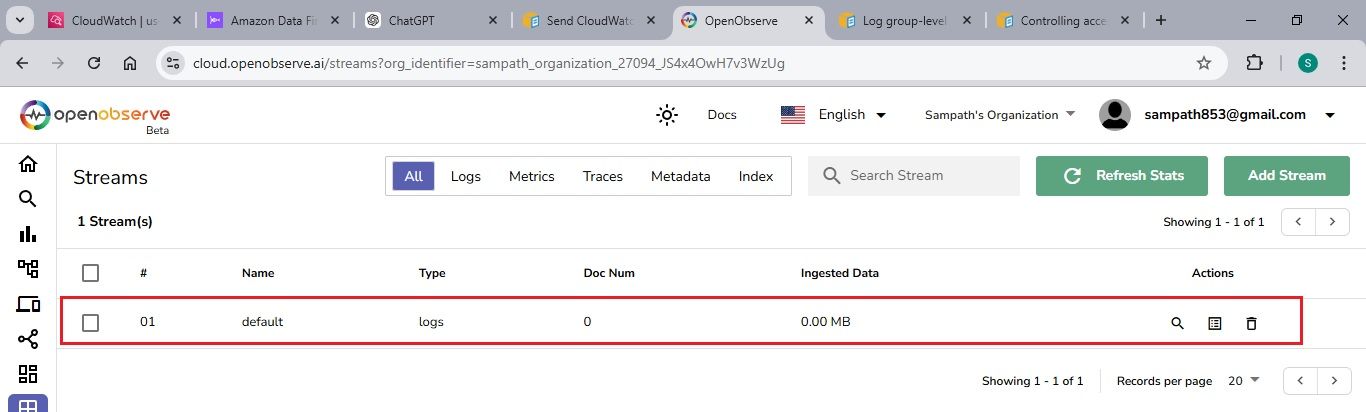

You can also click on Streams to view a list of all available streams in OpenObserve.

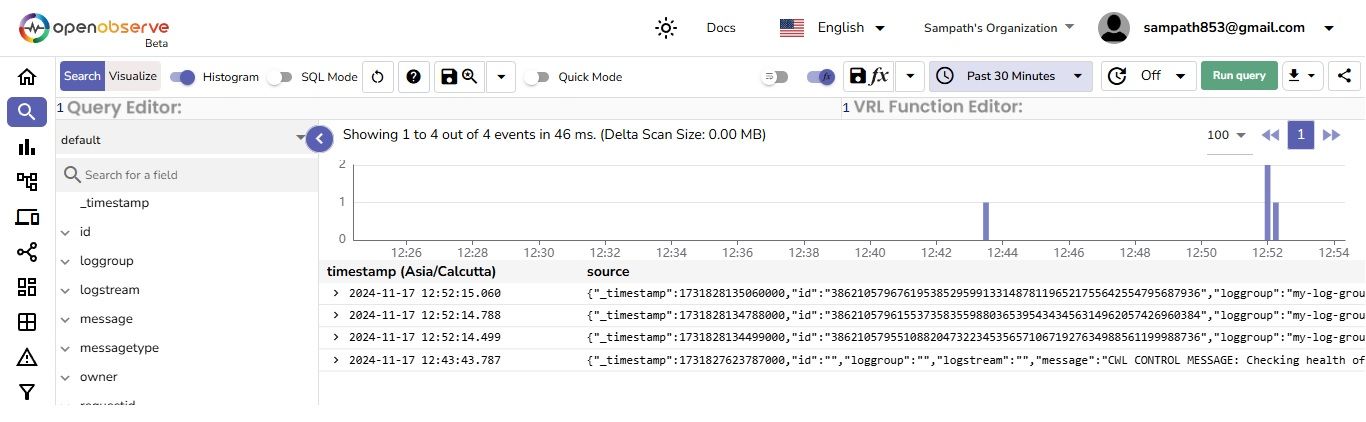

Now, let's go to the Logs section, select the default stream, and click on Run query to view the logs.

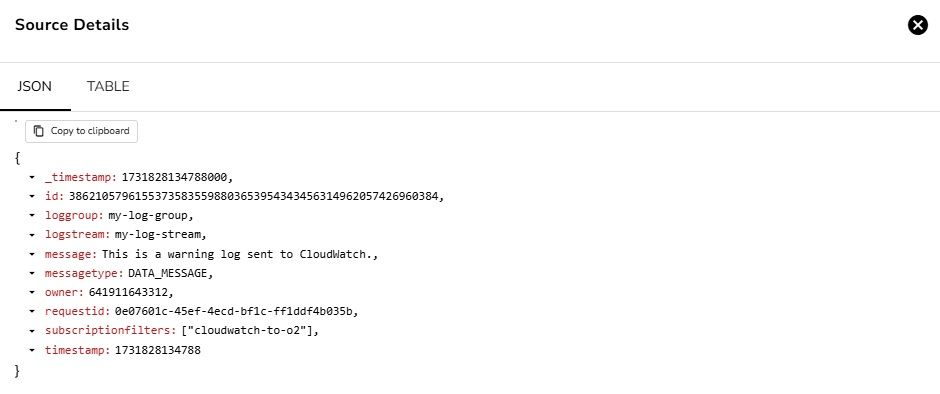

Click on any record to see the details of that specific log.

Now that your logs are flowing into OpenObserve, it’s time to visualize the data and make it actionable.

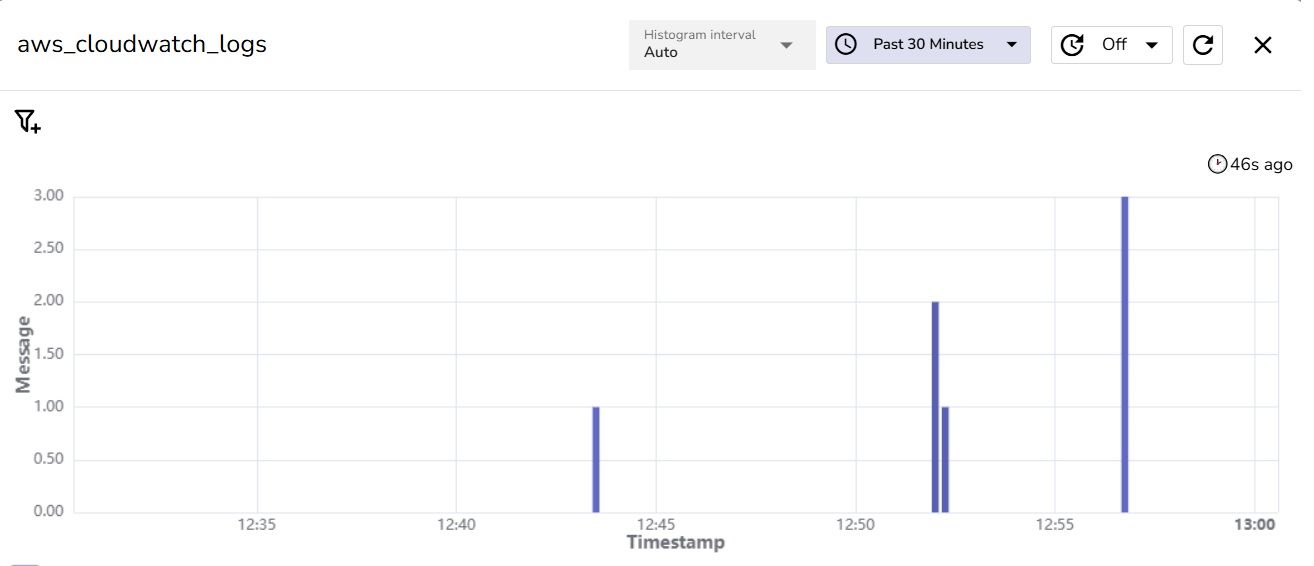

The dashboard shows log events over time, with bursts of activity around 12:45 and 12:55, and no logs in between.

| Feature | OpenObserve (O2) | AWS CloudWatch |
|---|---|---|
| Deployment Flexibility | Self-hosted on-prem, edge, or cloud for low latency | Cloud-based, AWS-centric |
| Customization & Control | Full control and customization available | Limited customization, AWS-bound |
| Cost Efficiency | Open-source, cost-effective, scalable | Costs scale quickly with data volume |
| Edge Support | Supports true edge deployments | Limited to AWS-specific edge services |
| Open-Source Ecosystem | Integrates with open-source tools (e.g., Prometheus) | Constrained to AWS integrations |
| Data Ownership | Full data control in self-hosted setups | Data stored in AWS, potential privacy concerns |
| UI & Querying Capabilities | Much better UI and querying capabilities | Standard UI and querying capabilities |

Integrating AWS CloudWatch Logs with Kinesis Firehose and OpenObserve streamlines log management by centralizing log data, enabling real-time monitoring, and providing powerful log analysis. This setup ensures quick issue detection and efficient troubleshooting. With OpenObserve, you can visualize trends, monitor performance, and scale as your log volume grows. It makes managing logs more efficient, improving overall application health and operational insights.