How to deploy OpenObserve on Amazon EKS with ALB ingress


If you are considering a self-managed production deployment of OpenObserve on AWS that requires scalability, security, high availability, ease of operations, and simple upgradability, Amazon Elastic Kubernetes Service (EKS) emerges as a strong candidate.

EKS, being fully managed by AWS, simplifies the management of the Kubernetes control plane, allowing you to focus on your applications. It offers built-in scalability features like Horizontal Pod Autoscaling and Cluster Autoscaler, integrates seamlessly with other AWS services for enhanced security, and ensures high availability through multi-AZ deployments. Additionally, EKS eases operations with a managed control plane and supports straightforward upgradability through version management and automated policies. This makes EKS an ideal choice for deploying OpenObserve in a production environment on AWS.

Prabhat has created an excellent video walkthrough in the getting started section that covers deploying the solution, and I highly recommend it. Combine it with this GitHub link and you can get a basic deployment going quickly.

Note: consider the costs associated with deploying this solution beforehand. You may need to adjust the EKS managedNodeGroups to suit your needs.

The deployment leaves you with a cluster that requires port-forwarding to access services and does not expose them externally. In this blog I will focus solely on exposing OpenObserve services externally.

An ALB is a good choice for flexible application-level traffic management and routing. It can be used for SSL termination and offload, session persistence, and content-based routing. An NLB, on the other hand, offers higher-performance, low-latency, scalable network-level load balancing and reliability, which is why NLB is recommended for OpenObserve. This post covers ALB, however, because IaC for an NLB deployment is already available in the GitHub repo.

EKS offers two ways to expose Services to clients outside the cluster: a Kubernetes Service of type LoadBalancer, or Ingress resources.

There are two key methods of provisioning load balancers within an EKS cluster: the AWS cloud provider load balancer controller (legacy, only receiving critical bug fixes) and the AWS Load Balancer Controller (recommended).

We will go with the recommended option, the AWS Load Balancer Controller, and deploy it by following the AWS documentation.
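As a rough sketch of what the AWS documentation walks through, the Helm portion of the controller install might look like the following. It assumes the IAM policy and the `aws-load-balancer-controller` service account (with its IAM role) have already been created as described in the AWS guide. The cluster name `my-eks-cluster` and the function names are placeholders, not values from this deployment.

```shell
# Placeholder: replace with the name of your own EKS cluster.
CLUSTER_NAME="my-eks-cluster"

install_lb_controller() {
  # Add the EKS charts repository that hosts the controller chart.
  helm repo add eks https://aws.github.io/eks-charts
  helm repo update

  # Install (or upgrade) the controller, reusing the pre-created service account.
  helm upgrade --install aws-load-balancer-controller eks/aws-load-balancer-controller \
    --namespace kube-system \
    --set clusterName="$CLUSTER_NAME" \
    --set serviceAccount.create=false \
    --set serviceAccount.name=aws-load-balancer-controller
}

verify_lb_controller() {
  # Lists the controller deployment so you can check its READY and AVAILABLE columns.
  kubectl get deployment --namespace kube-system aws-load-balancer-controller
}

# Usage, once helm and kubectl point at your cluster:
#   install_lb_controller && verify_lb_controller
```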

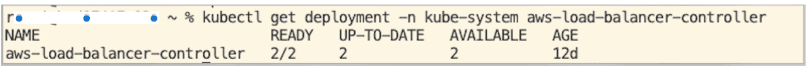

After successful completion of this step you should be able to see something like this:

It's good to be aware that the controller offers an IngressGroup feature that lets you share a single ALB across multiple Ingress resources. This eliminates the need for a dedicated ALB per service. However, depending on the volume of ingested data, you may want to dedicate an ALB to this service, which is what I have done.
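For comparison, if you did want to share one ALB across several Ingress resources, the controller's `alb.ingress.kubernetes.io/group.name` annotation is what places an Ingress in a group. The following is a minimal sketch, assuming the Helm release `o2` in the `openobserve` namespace used later in this post. The group name `shared-observability` and the function name are hypothetical.

```shell
# Hypothetical group name; every Ingress carrying the same value shares one ALB.
GROUP_NAME="shared-observability"

join_ingress_group() {
  # Dots inside the annotation key are escaped so Helm does not treat them as nesting.
  helm --namespace openobserve -f values.yaml upgrade o2 openobserve/openobserve \
    --set "ingress.annotations.alb\.ingress\.kubernetes\.io/group\.name=$GROUP_NAME"
}

# Usage: join_ingress_group
```

The same annotation can equally be added under `ingress.annotations` in values.yaml. This post keeps a dedicated ALB, so the annotation is left out.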

Another consideration is the load balancer target type. There are two types of targets you can register in the target group: instance and IP. I decided to use IP targets for two reasons. First, traffic from the load balancer is forwarded directly to the pod, which eliminates the extra hops through the worker nodes and the Service cluster IP and so reduces latency. Second, the load balancer's health checks are received and answered directly by the individual pods.

I will also be using the ALB endpoint to send logs via Amazon Data Firehose, so the ALB needs a valid SSL certificate for TLS termination, and the DNS FQDN must match the certificate's Common Name.

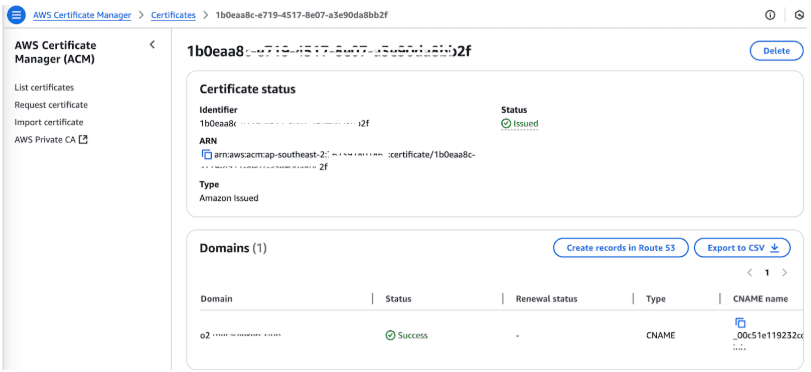

The domain name chosen is o2.blog.openobserve.ai, and the corresponding certificate is issued by AWS Certificate Manager (ACM).

After successful completion of this step you should be able to see something like this:

Copy the certificate ARN to be used later.
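If you prefer the command line over the console, requesting the certificate and reading back its ARN could look roughly like this. It is a sketch that assumes DNS validation and the `ap-southeast-2` region (inferred from the certificate ARN shown in values.yaml below). The function names are my own.

```shell
# Values taken from this post; change them for your own domain and region.
DOMAIN_NAME="o2.blog.openobserve.ai"
AWS_REGION="ap-southeast-2"

request_certificate() {
  # Request a DNS-validated public certificate and print only its ARN.
  aws acm request-certificate \
    --domain-name "$DOMAIN_NAME" \
    --validation-method DNS \
    --region "$AWS_REGION" \
    --query CertificateArn \
    --output text
}

certificate_status() {
  # $1 is the certificate ARN; prints the status, for example PENDING_VALIDATION or ISSUED.
  aws acm describe-certificate \
    --certificate-arn "$1" \
    --region "$AWS_REGION" \
    --query Certificate.Status \
    --output text
}

# Usage:
#   CERT_ARN=$(request_certificate)
#   certificate_status "$CERT_ARN"
```

The certificate only moves to ISSUED after the validation record that ACM provides has been added to the domain's DNS zone.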

Finally, in Step #4 we tie it all together by updating values.yaml and upgrading the deployment using Helm. Only the changed or relevant settings are shown; this is not the entire values.yaml file.

    serviceAccount:
      create: true
      annotations:
        eks.amazonaws.com/role-arn: arn:aws:iam::<accountid>:role/OpenObserveRole
      name: ""

    config:
      ZO_WEB_URL: "https://o2.blog.openobserve.ai"

    service:
      type: ClusterIP
      # type: LoadBalancer
      http_port: 5080
      grpc_port: 5081
      report_server_port: 5082

    ingress:
      enabled: true
      className: "alb"
      annotations:
        cert-manager.io/issuer: letsencrypt
        kubernetes.io/tls-acme: "true"
        alb.ingress.kubernetes.io/target-type: ip
        alb.ingress.kubernetes.io/scheme: internet-facing
        alb.ingress.kubernetes.io/healthcheck-path: /web/
        alb.ingress.kubernetes.io/backend-protocol: HTTP
        alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}, {"HTTPS": 443}]'
        alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:ap-southeast-2:<accountid>:certificate/1b0eaa8c<SNIP>2f
        alb.ingress.kubernetes.io/ssl-policy: ELBSecurityPolicy-TLS13-1-2-2021-06
      hosts:
        - host: o2.blog.openobserve.ai
          paths:
            - path: /
              pathType: Prefix
              backend:
                service:
                  name: o2-openobserve-router
                  port:
                    number: 5080
      tls:
        - secretName: o2.blog.openobserve.ai
          hosts:
            - o2.blog.openobserve.ai

Perform a Helm upgrade to apply the updated values.yaml:

helm --namespace openobserve -f values.yaml upgrade o2 openobserve/openobserve
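Once the upgrade finishes, you can check from the command line that the controller has picked up the Ingress and provisioned an ALB. This is a small sketch that assumes the `openobserve` namespace used above and that the OpenObserve Ingress is the first one listed in that namespace. The function names are illustrative.

```shell
NAMESPACE="openobserve"

show_ingress() {
  # The ADDRESS column is populated with the ALB DNS name once provisioning completes.
  kubectl get ingress --namespace "$NAMESPACE"
}

alb_hostname() {
  # Print only the ALB DNS name of the first Ingress in the namespace.
  kubectl get ingress --namespace "$NAMESPACE" \
    -o jsonpath='{.items[0].status.loadBalancer.ingress[0].hostname}'
}

# Usage: show_ingress; alb_hostname
```

The hostname printed here is the value your DNS record for o2.blog.openobserve.ai needs to point at.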

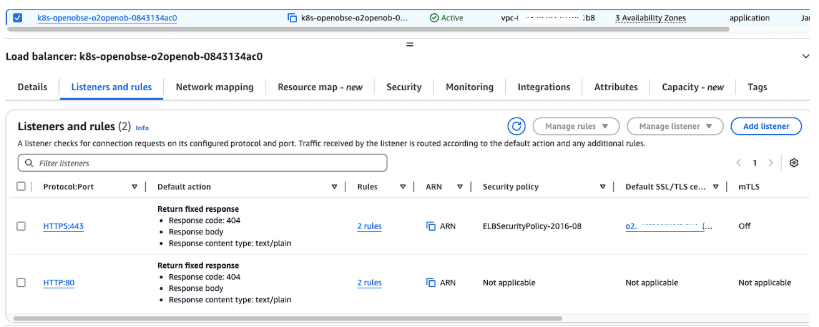

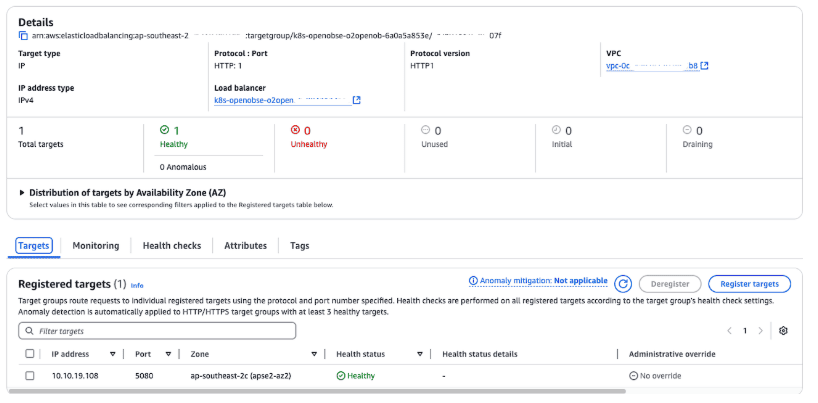

You should see an ALB deployed with listeners, and a target group with registered, healthy IP targets.

Everything appears to be working, so we can try accessing the console at https://o2.blog.openobserve.ai and use the HTTP endpoint to ingest logs. In this case I am ingesting logs via Amazon Data Firehose, using https://o2.blog.openobserve.ai/aws/default/default/_kinesis_firehose as the endpoint.
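A quick smoke test from a terminal might look like the sketch below. The Firehose URL is the one from this post. The `_json` ingestion path reflects my understanding of the OpenObserve HTTP ingestion API, and `O2_USER` and `O2_PASSWORD` are hypothetical environment variables holding your own credentials.

```shell
O2_BASE_URL="https://o2.blog.openobserve.ai"
O2_ORG="default"
O2_STREAM="default"

# JSON ingestion endpoint (assumed path) and the Firehose endpoint used in this post.
JSON_INGEST_URL="$O2_BASE_URL/api/$O2_ORG/$O2_STREAM/_json"
FIREHOSE_URL="$O2_BASE_URL/aws/$O2_ORG/$O2_STREAM/_kinesis_firehose"

check_console() {
  # Prints the HTTP status code returned for the web UI path through the ALB.
  curl -sS -o /dev/null -w '%{http_code}\n' "$O2_BASE_URL/web/"
}

send_test_log() {
  # Posts a single JSON log record using basic authentication.
  curl -sS -u "$O2_USER:$O2_PASSWORD" \
    -H 'Content-Type: application/json' \
    -d '[{"level":"info","message":"hello from curl"}]' \
    "$JSON_INGEST_URL"
}

# Usage: check_console; send_test_log
```

If the test record shows up in the `default` stream, the ALB, certificate, and routing are all working end to end.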

In this blog we covered how to deploy OpenObserve on Amazon EKS with ALB ingress and a valid public certificate.
