Azure Monitoring with OTel Collector and OpenObserve: Collect Logs & Metrics from Any Resource


Cloud monitoring on Azure often feels fragmented. Every service exposes its own knobs: Activity Logs, Diagnostic Settings, Resource Logs, Metrics, Insights, agent-based logs, and more. The moment you onboard three or four services, your pipeline becomes a mess.

But the truth is that all Azure resources can be monitored using one generic architecture. The only thing that changes is which logs and metrics each resource exposes.

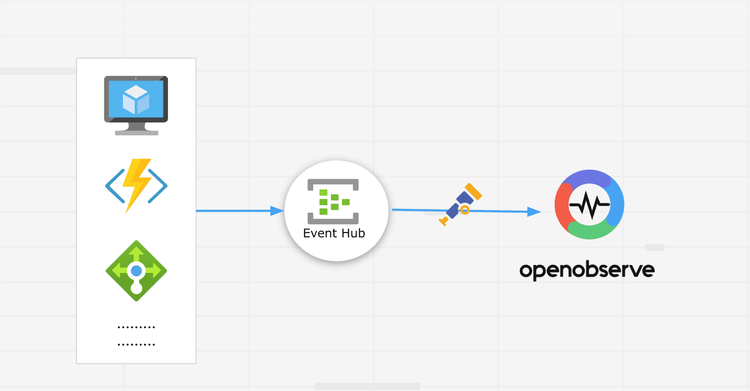

This article breaks down that generic architecture, shows how Azure emits logs/metrics, and how you can collect all of them using Event Hub → OTel Azure EventHub Receiver → OpenObserve, without installing extra agents or writing glue code.

Azure resources fail in ways the Portal doesn’t clearly show. A VM can look healthy while the disk is saturated, a database can throttle without any obvious warning, storage accounts quietly start returning 429s, and a single NSG rule change can break traffic with zero visibility.

Azure Monitor helps, but it’s split across multiple blades, varies by service, and gets expensive fast if you rely on Log Analytics for everything. What teams actually want is one place where all logs and metrics land in a consistent format.

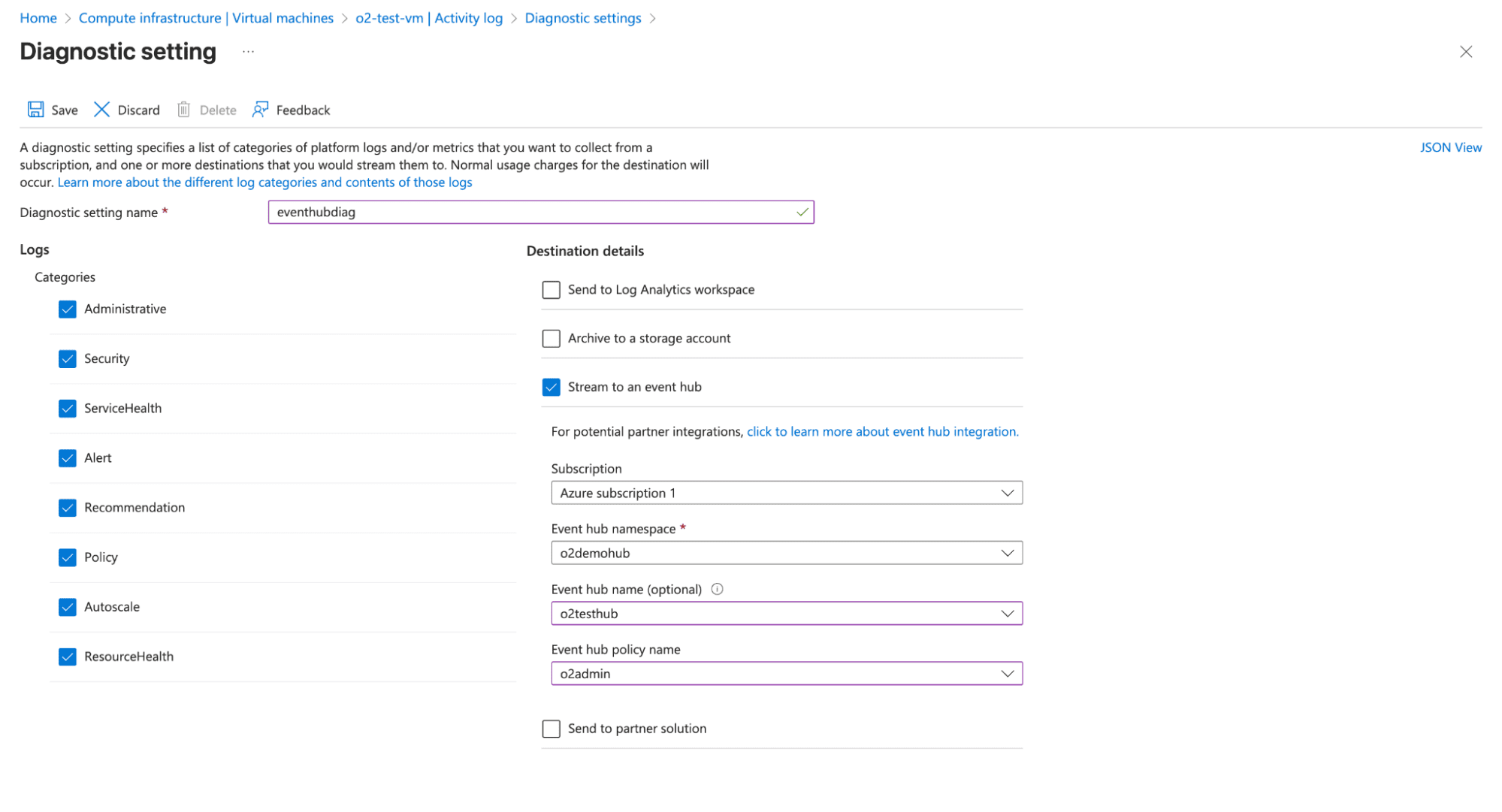

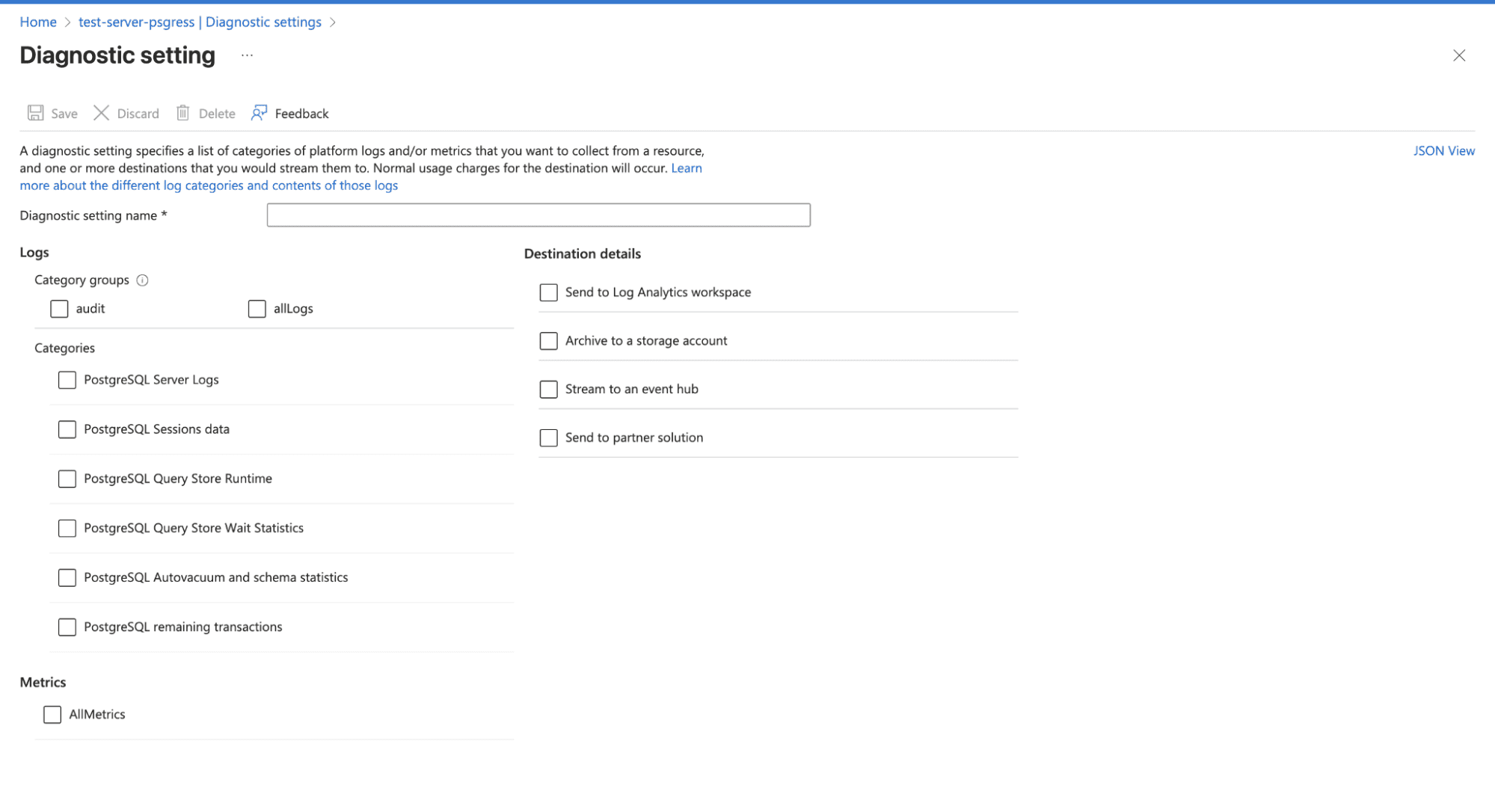

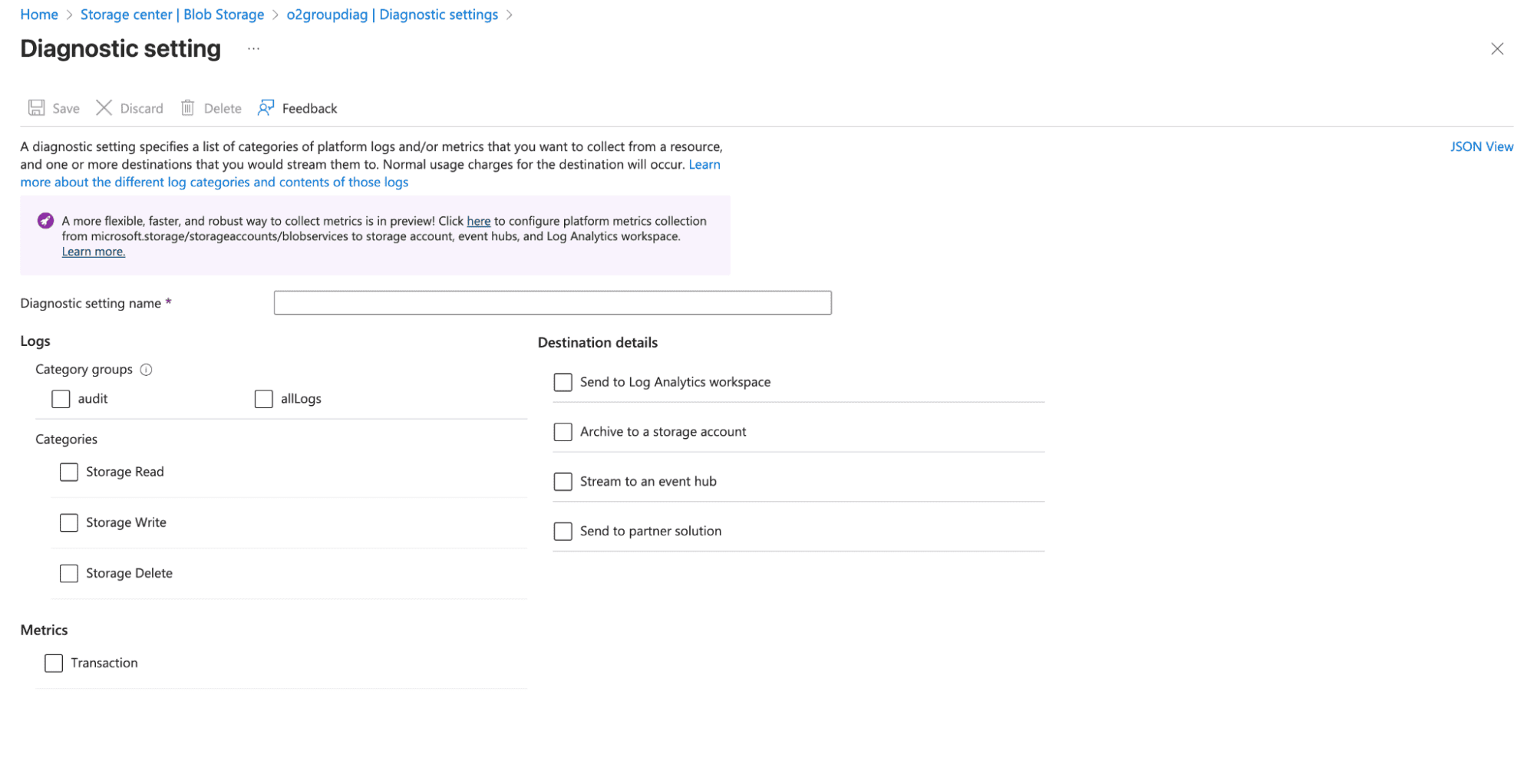

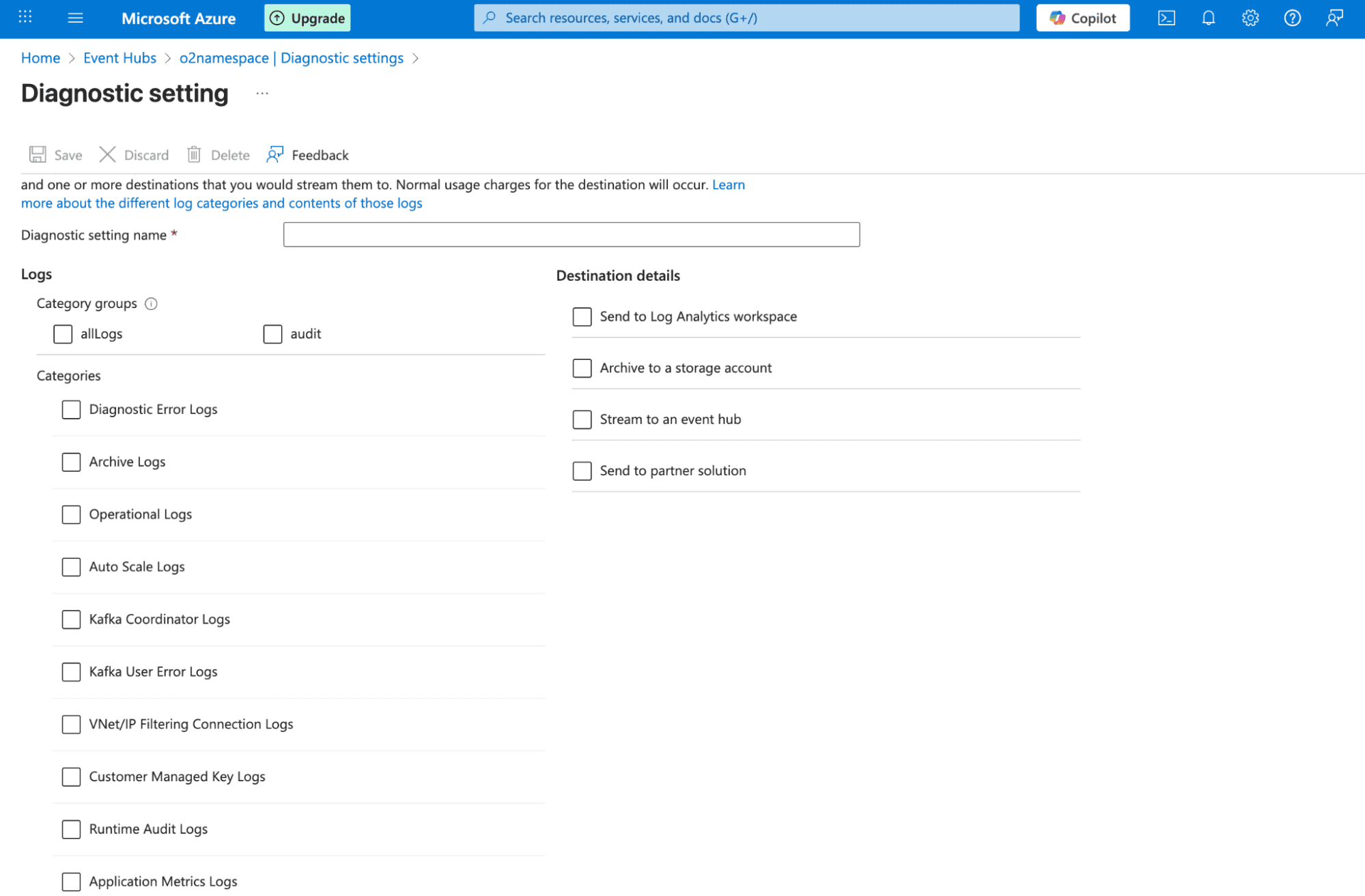

The good news is that every Azure resource (VM, database, storage account, load balancer, and so on) already exposes its telemetry through the same Diagnostic Settings pipeline.

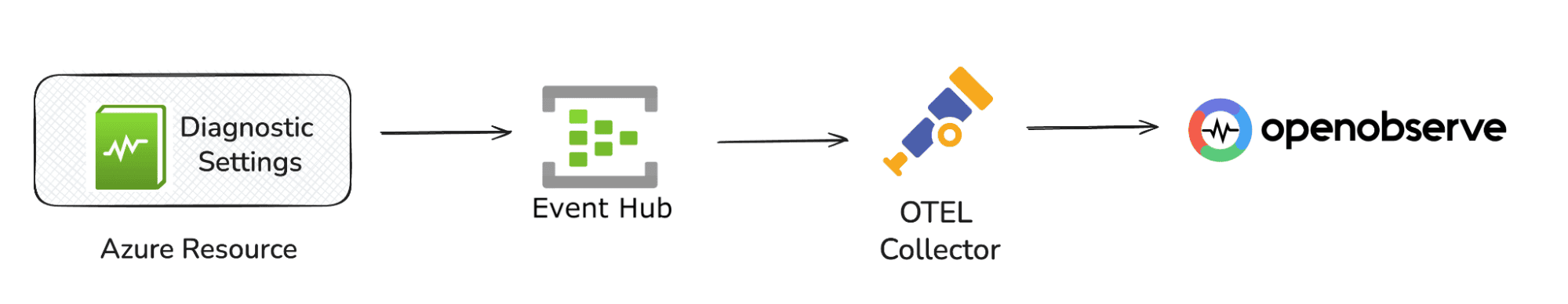

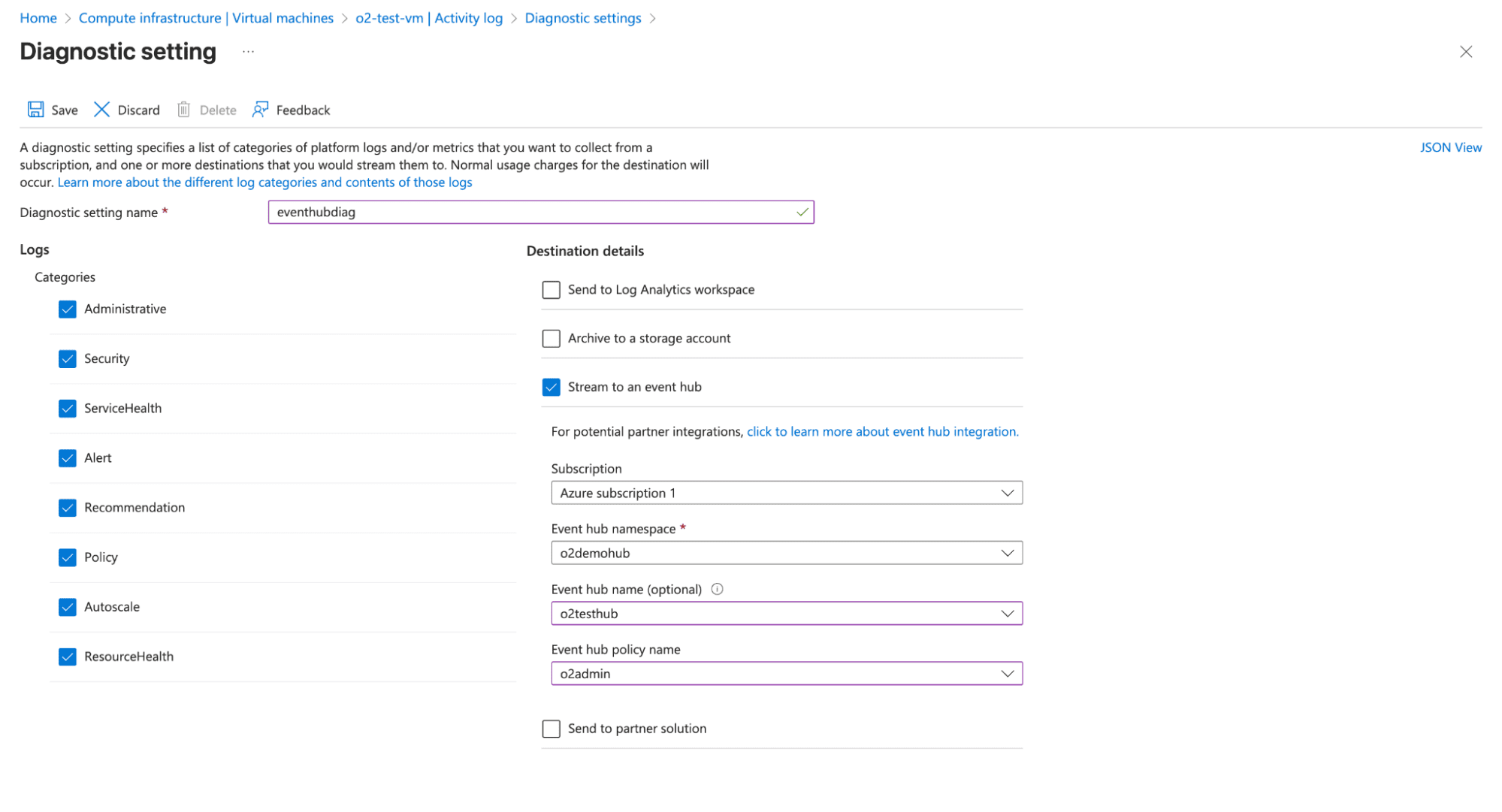

Configure a Diagnostic Setting so that Azure pushes the required logs and metrics into an Event Hub. The OpenTelemetry Collector then reads from that Event Hub using the azureeventhub receiver, and OpenObserve ingests everything through OTLP.

That’s it. No per-resource agents, no service-specific exporters, no custom hacks.

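The pipeline looks like this: Azure resource → Diagnostic Settings → Event Hub → OTel Collector (azureeventhub receiver) → OpenObserve.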

Note: Diagnostic Settings don’t capture everything. For example, VMs don’t stream in-guest OS logs by default, databases may only expose certain engine logs, and some metrics require additional agents or higher-tier monitoring. Always check the categories available in each resource’s Diagnostic Settings to know what you can actually collect.
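Whatever categories a resource does expose reach the Event Hub as JSON batches, with the individual entries wrapped in a top-level `records` array. The Python sketch below only illustrates that envelope and is a handy way to eyeball which categories are actually flowing. The sample payload, its resource IDs, and the helper names are made up for this example, and the exact fields vary by service.

```python
import json
from collections import Counter

# Hypothetical Event Hub message body. Real payloads carry more fields,
# and the field set differs from one Azure service to another.
SAMPLE_BODY = json.dumps({
    "records": [
        {"time": "2024-01-01T00:00:00Z", "category": "AuditEvent",
         "resourceId": "/SUBSCRIPTIONS/EXAMPLE/RESOURCEGROUPS/DEMO/PROVIDERS/MICROSOFT.KEYVAULT/VAULTS/DEMO-KV",
         "operationName": "SecretGet"},
        {"time": "2024-01-01T00:00:05Z", "category": "AuditEvent",
         "resourceId": "/SUBSCRIPTIONS/EXAMPLE/RESOURCEGROUPS/DEMO/PROVIDERS/MICROSOFT.KEYVAULT/VAULTS/DEMO-KV",
         "operationName": "SecretList"},
        {"time": "2024-01-01T00:00:09Z", "category": "StorageRead",
         "resourceId": "/SUBSCRIPTIONS/EXAMPLE/RESOURCEGROUPS/DEMO/PROVIDERS/MICROSOFT.STORAGE/STORAGEACCOUNTS/DEMOSA",
         "operationName": "GetBlob"},
    ]
})


def unpack_records(body: str) -> list[dict]:
    """Return the individual log entries from one Event Hub message body."""
    payload = json.loads(body)
    if not isinstance(payload, dict):
        raise ValueError("expected a JSON object at the top level")
    records = payload.get("records", [])
    if not isinstance(records, list):
        raise ValueError("expected 'records' to be a JSON array")
    return records


def count_by_category(records: list[dict]) -> Counter:
    """Count entries per diagnostic category."""
    return Counter(r.get("category", "<none>") for r in records)


if __name__ == "__main__":
    for category, n in count_by_category(unpack_records(SAMPLE_BODY)).items():
        print(f"{category}: {n}")
```

In the pipeline described here the Collector does this unpacking for you; the sketch is only meant to show what a Diagnostic Setting actually emits.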

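Before starting, ensure you have:

- An Azure subscription with permission to create Event Hubs and configure Diagnostic Settings
- An OpenObserve account (the examples here use OpenObserve Cloud)
- A machine or container where the OTel Collector can run and reach the Event Hub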

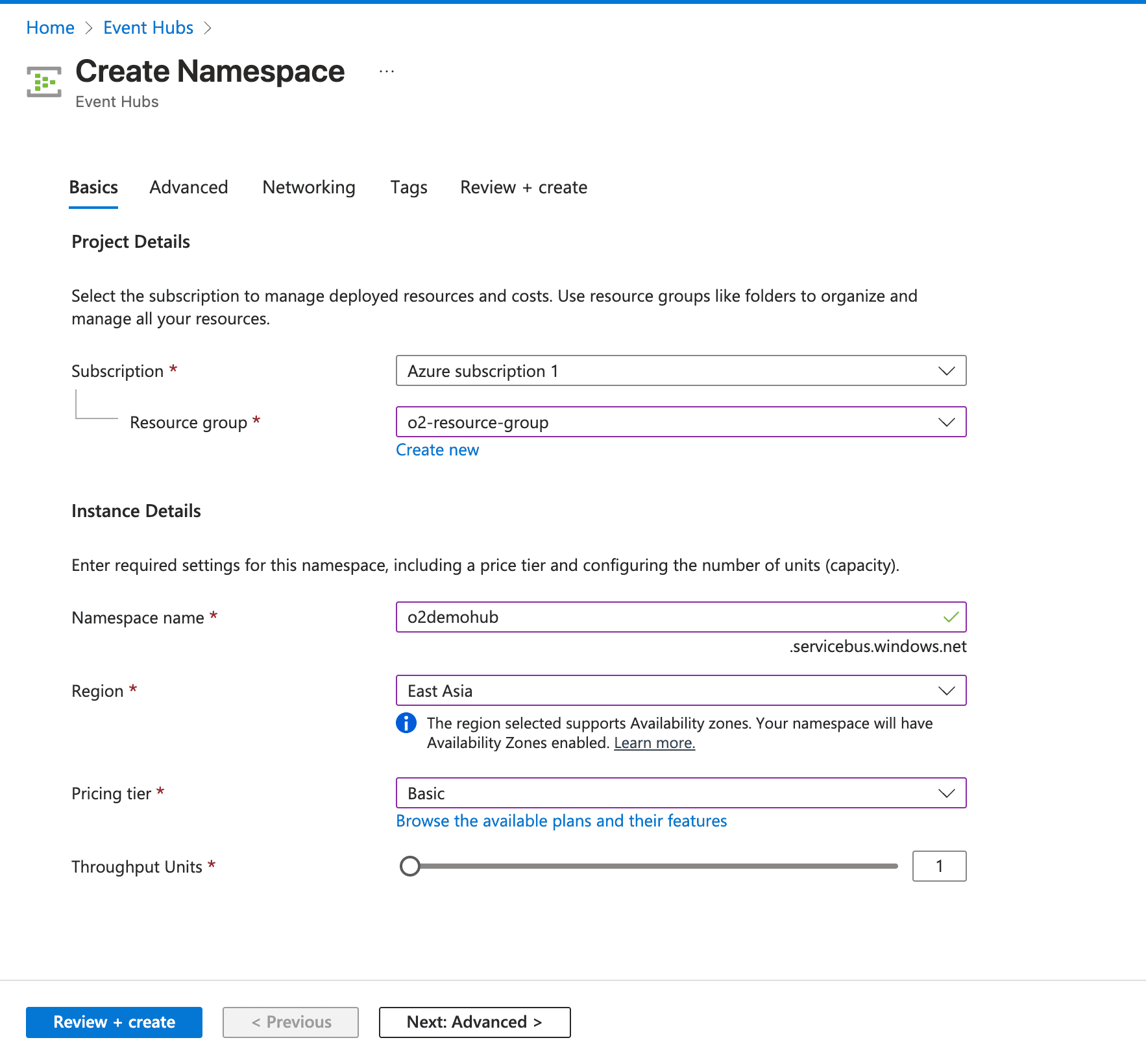

Set up an Event Hub to receive telemetry from all your Azure resources.

Create an Event Hub Namespace:

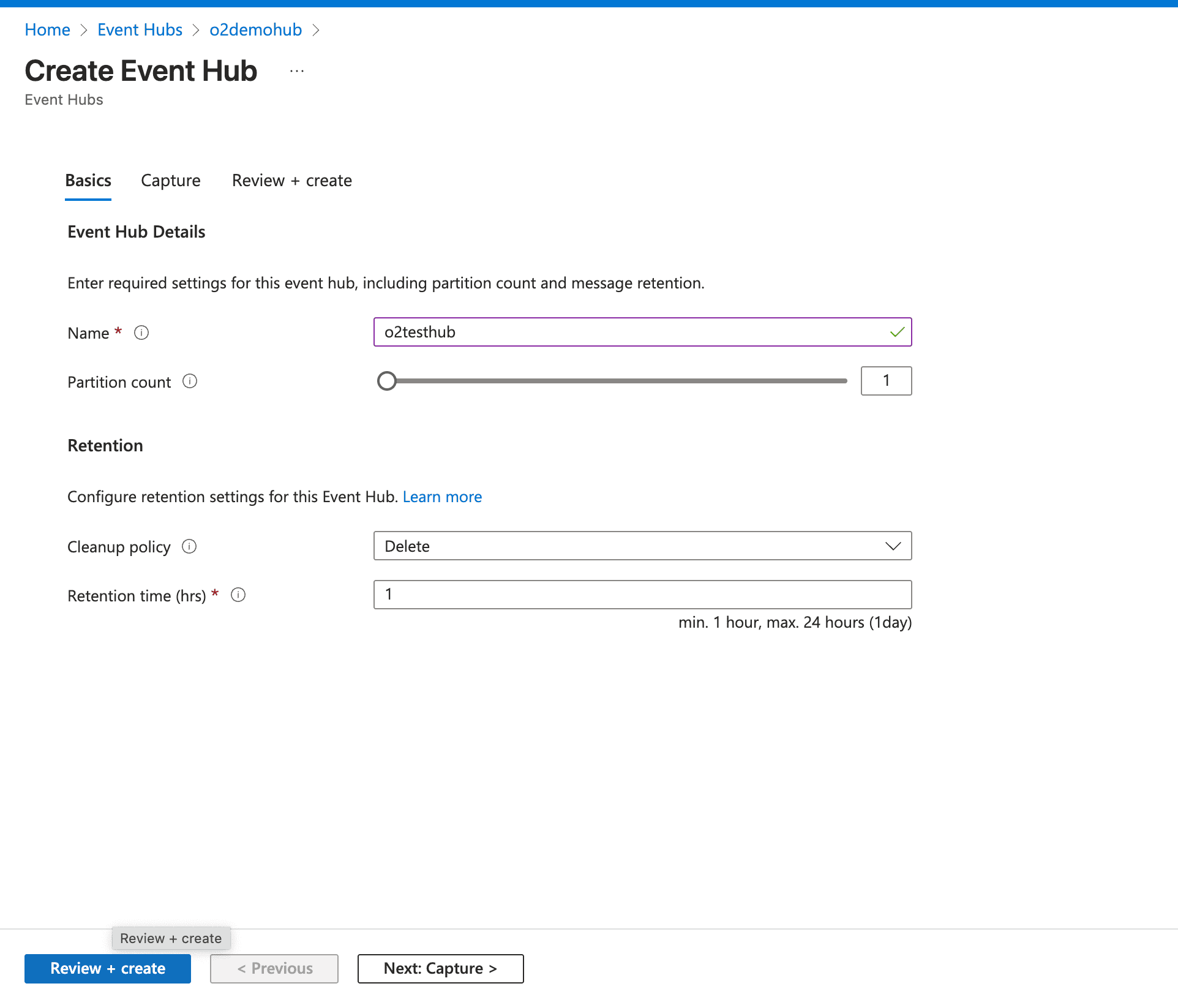

Add an Event Hub:

Give the Event Hub a name (for example, o2) and leave the default settings for Partition Count and Message Retention.

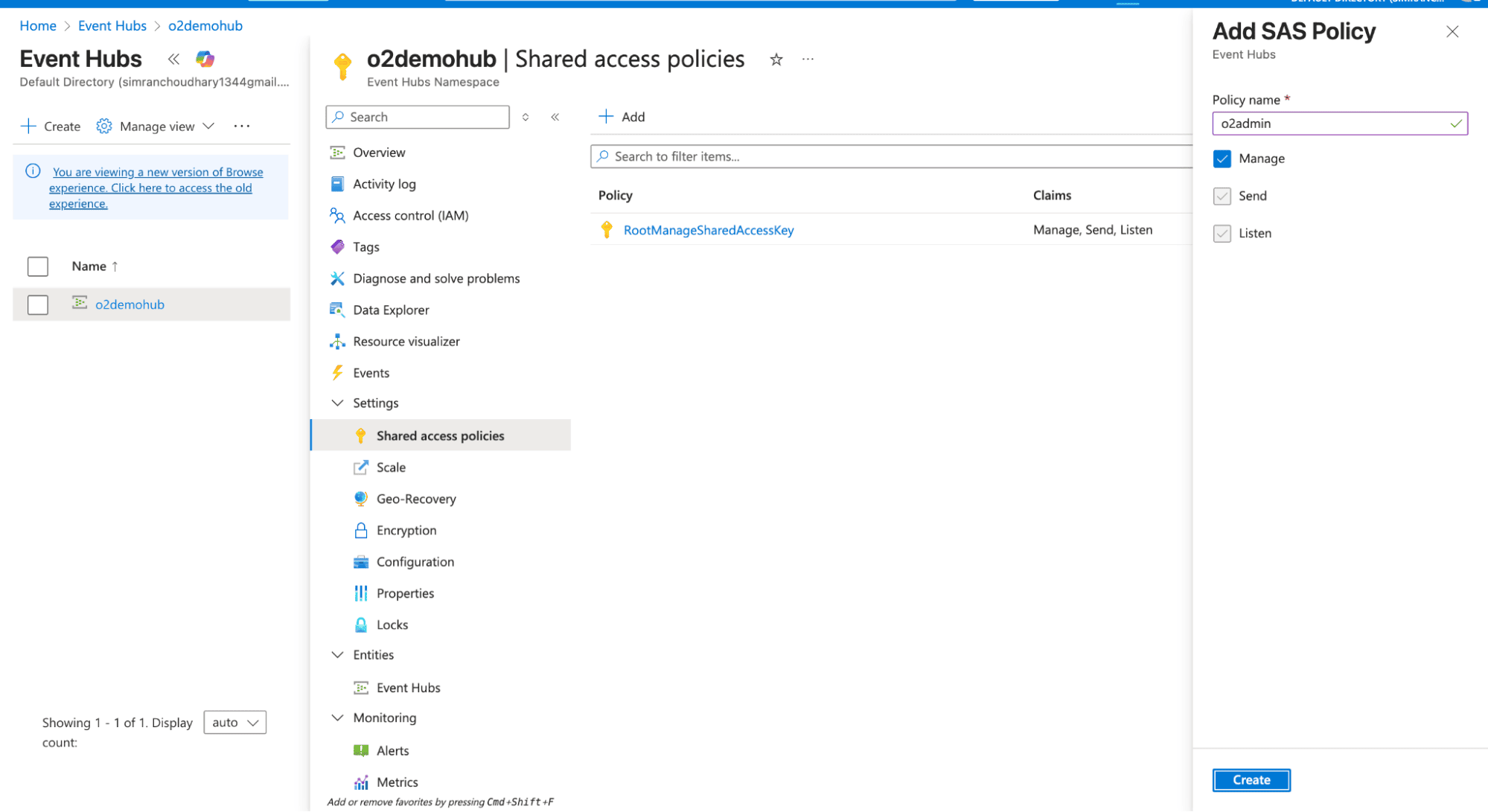

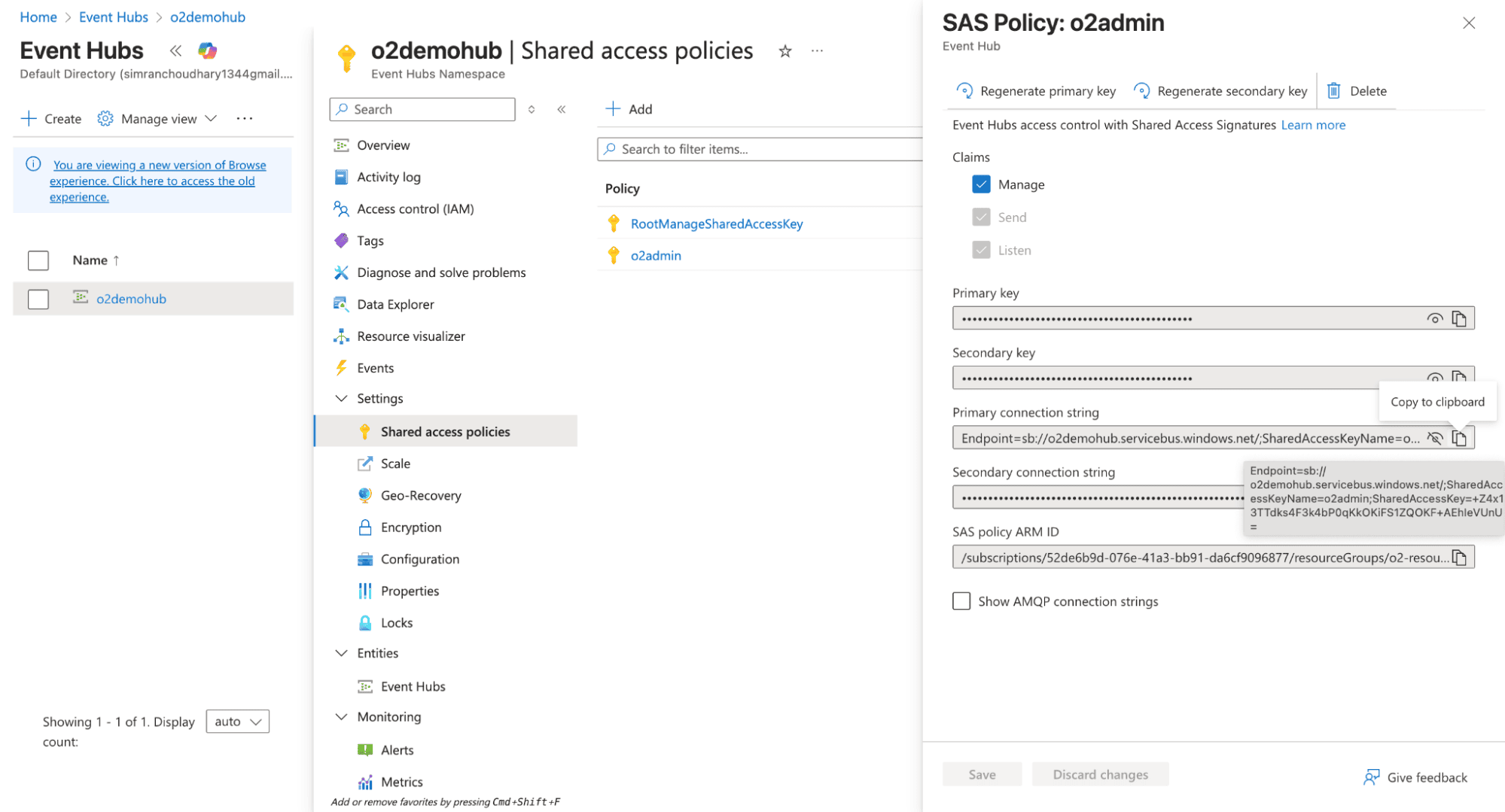

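Create a Shared Access Policy: add a policy on the Event Hub with the Listen claim (the sample connection string later in this article uses one named o2reader) and copy its connection string for the Collector configuration.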

You need the OTel Collector to pull the data from the Event Hub and push it to OpenObserve. You can run the Collector anywhere (locally, on an Azure VM, or inside a container) as long as it can reach the Event Hub.

Note: It is recommended to run the OpenTelemetry (OTel) Collector in a container rather than directly on a VM. Telemetry volume from Microsoft sources can be high, and a VM-hosted Collector takes more maintenance to handle that load efficiently.

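1. Download the Collector (binary):

    # Download a contrib build. Release assets are versioned, so set VERSION to a
    # tag from the opentelemetry-collector-releases page (without the leading "v").
    VERSION="<version>"
    wget "https://github.com/open-telemetry/opentelemetry-collector-releases/releases/download/v${VERSION}/otelcol-contrib_${VERSION}_linux_amd64.tar.gz"

    # Extract; the otelcol-contrib binary is unpacked into the current directory
    tar -xvzf "otelcol-contrib_${VERSION}_linux_amd64.tar.gz"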

This gives you the otelcol-contrib binary you can run directly.

Note: Use the contrib build. The core OTel Collector distribution does not include the azureeventhub receiver.

2. Create a configuration file azure-to-o2.yaml:

    receivers:
      azureeventhub:
        connection: "${AZURE_EVENTHUB_CONNECTION_STRING}"

    exporters:
      otlphttp/openobserve:
        endpoint: "https://api.openobserve.ai/api/<orgid>"
        headers:
          Authorization: "Bearer <token>"
          stream-name: <log-stream-name>

    service:
      pipelines:
        logs:
          receivers: [azureeventhub]
          exporters: [otlphttp/openobserve]
        metrics:
          receivers: [azureeventhub]
          exporters: [otlphttp/openobserve]

Sample connection string:

Endpoint=sb://o2namespace.servicebus.windows.net/;SharedAccessKeyName=o2reader;SharedAccessKey=1Tvmx0n39jahswkjeuy3VVc2S+AEhNXacWs=;EntityPath=o2eventhub
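A connection string like the one above is a semicolon-separated list of key=value pairs, and a dropped EntityPath or a truncated key is an easy mistake when copying it from the Portal. As a rough pre-flight check (not part of the Collector itself), the following Python sketch splits the string and reports what is missing. The function names and the dummy key are hypothetical, and treating EntityPath as required is an assumption based on the sample above.

```python
# Keys expected in an Event Hub-level connection string (assumption: the
# string is scoped to one Event Hub, so EntityPath must be present).
REQUIRED_KEYS = ("Endpoint", "SharedAccessKeyName", "SharedAccessKey", "EntityPath")


def parse_connection_string(conn: str) -> dict[str, str]:
    """Split 'Key=Value;Key=Value' into a dict, keeping '=' inside values."""
    parts: dict[str, str] = {}
    for segment in conn.strip().split(";"):
        if not segment:
            continue  # tolerate a trailing semicolon
        # Split on the first '=' only: base64 keys often end with '='.
        key, sep, value = segment.partition("=")
        if not sep or not key:
            raise ValueError(f"malformed segment: {segment!r}")
        parts[key] = value
    return parts


def missing_keys(conn: str) -> list[str]:
    """Return the required keys that are absent or empty."""
    parts = parse_connection_string(conn)
    return [key for key in REQUIRED_KEYS if not parts.get(key)]


if __name__ == "__main__":
    example = (
        "Endpoint=sb://o2namespace.servicebus.windows.net/;"
        "SharedAccessKeyName=o2reader;SharedAccessKey=dummy+key=;"
        "EntityPath=o2eventhub"
    )
    print(parse_connection_string(example)["EntityPath"])
    print(missing_keys(example.replace(";EntityPath=o2eventhub", "")))
```

Running a check like this before starting the Collector catches copy-and-paste mistakes earlier than waiting for a receiver error in the Collector logs.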

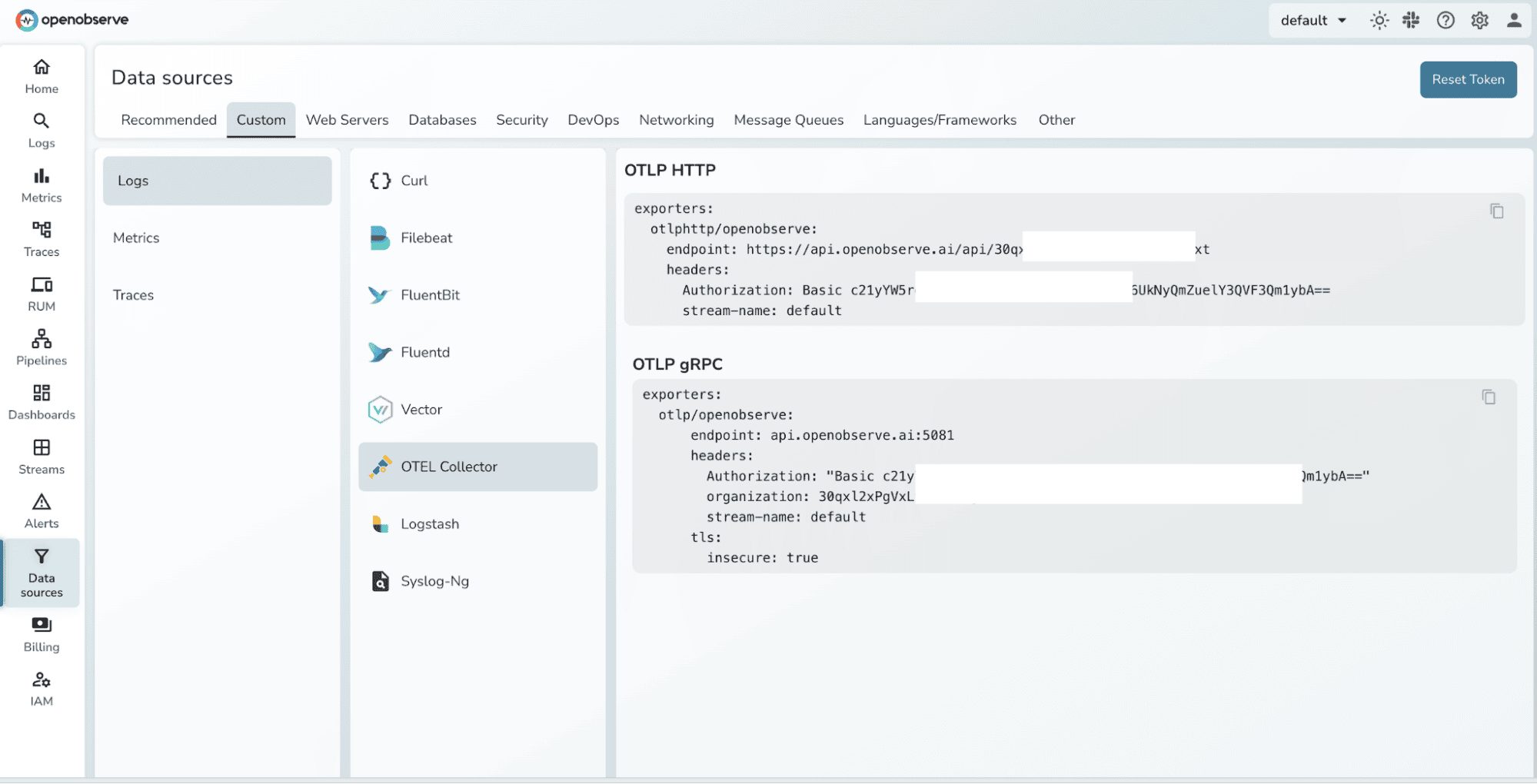

You can find the endpoint and credentials on the OpenObserve Data Sources page:

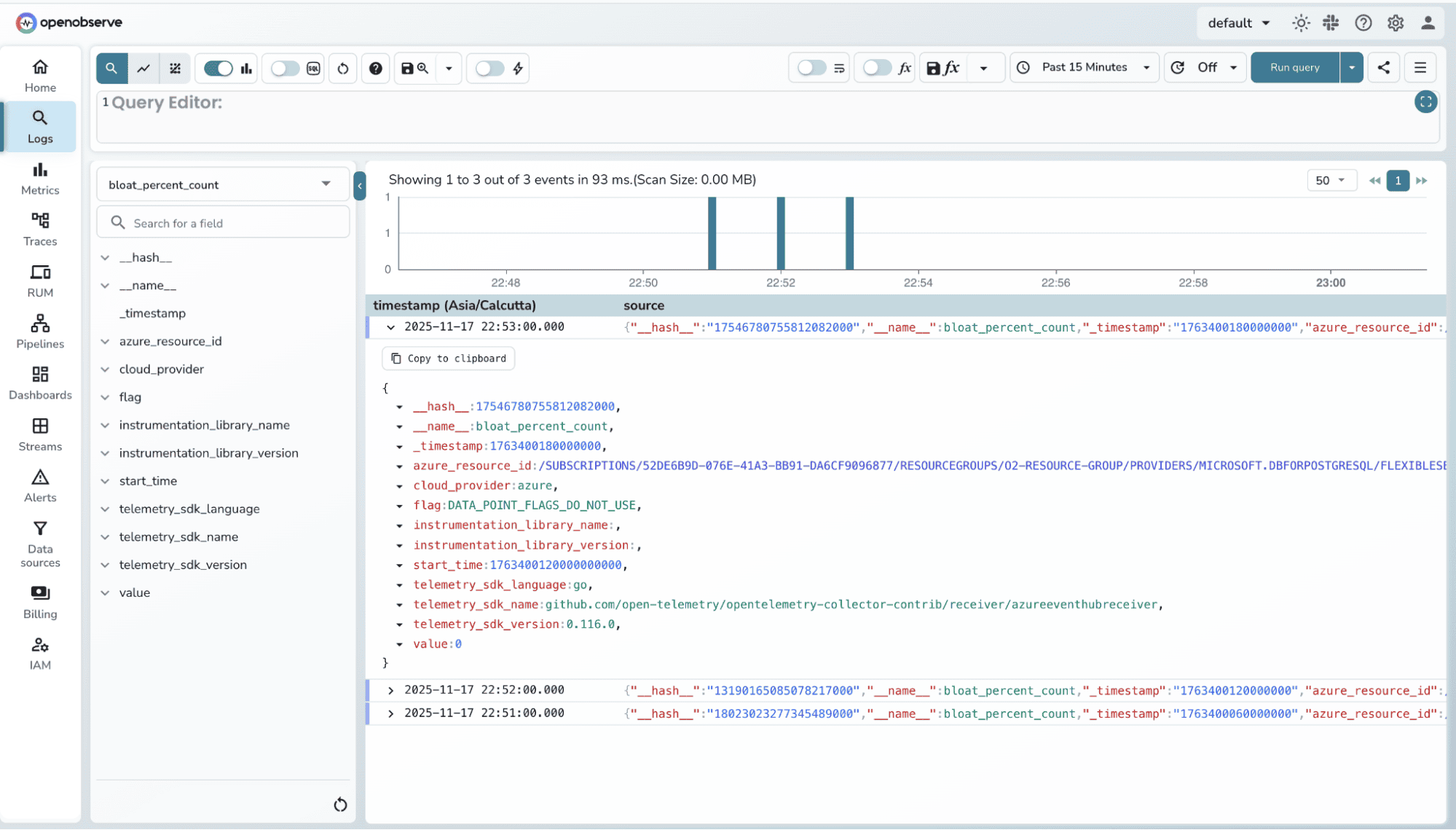

Note: Metric names land in OpenObserve exactly as Azure sends them through Event Hub. The Collector does not rename them unless you add a metric transform.

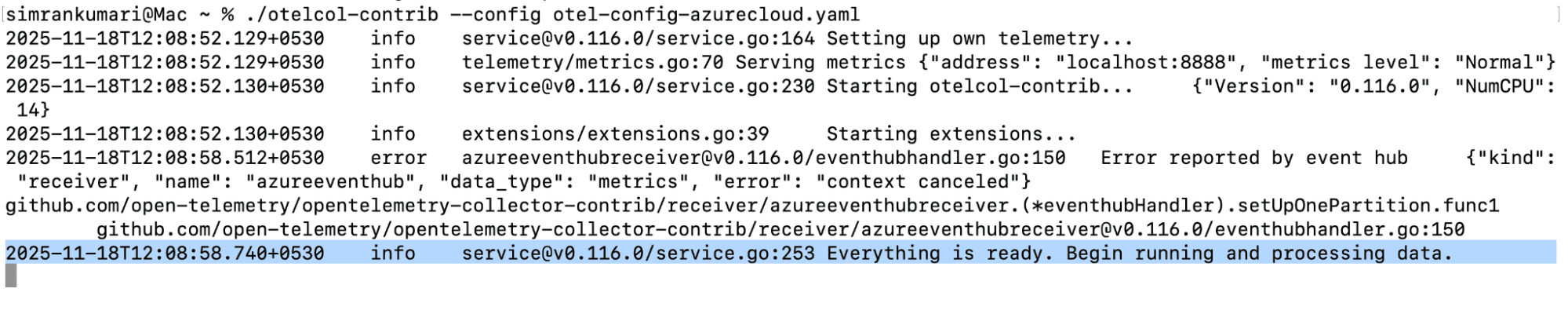

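3. Run the Collector. The configuration reads the connection string from the AZURE_EVENTHUB_CONNECTION_STRING environment variable, so export it first:

    export AZURE_EVENTHUB_CONNECTION_STRING='<your Event Hub connection string>'
    ./otelcol-contrib --config azure-to-o2.yaml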

Enable the Azure resources to stream logs and metrics to the Event Hub.

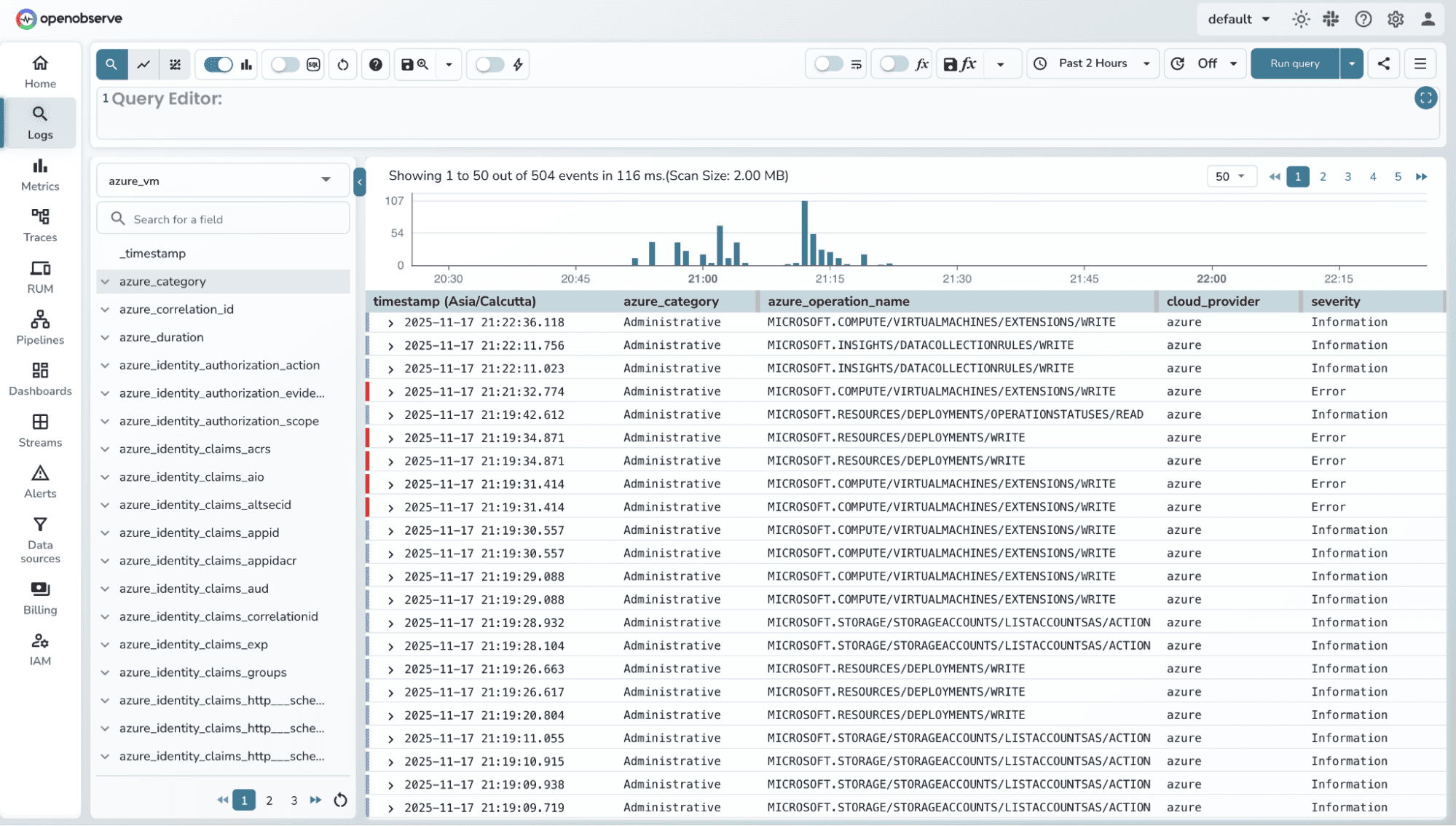

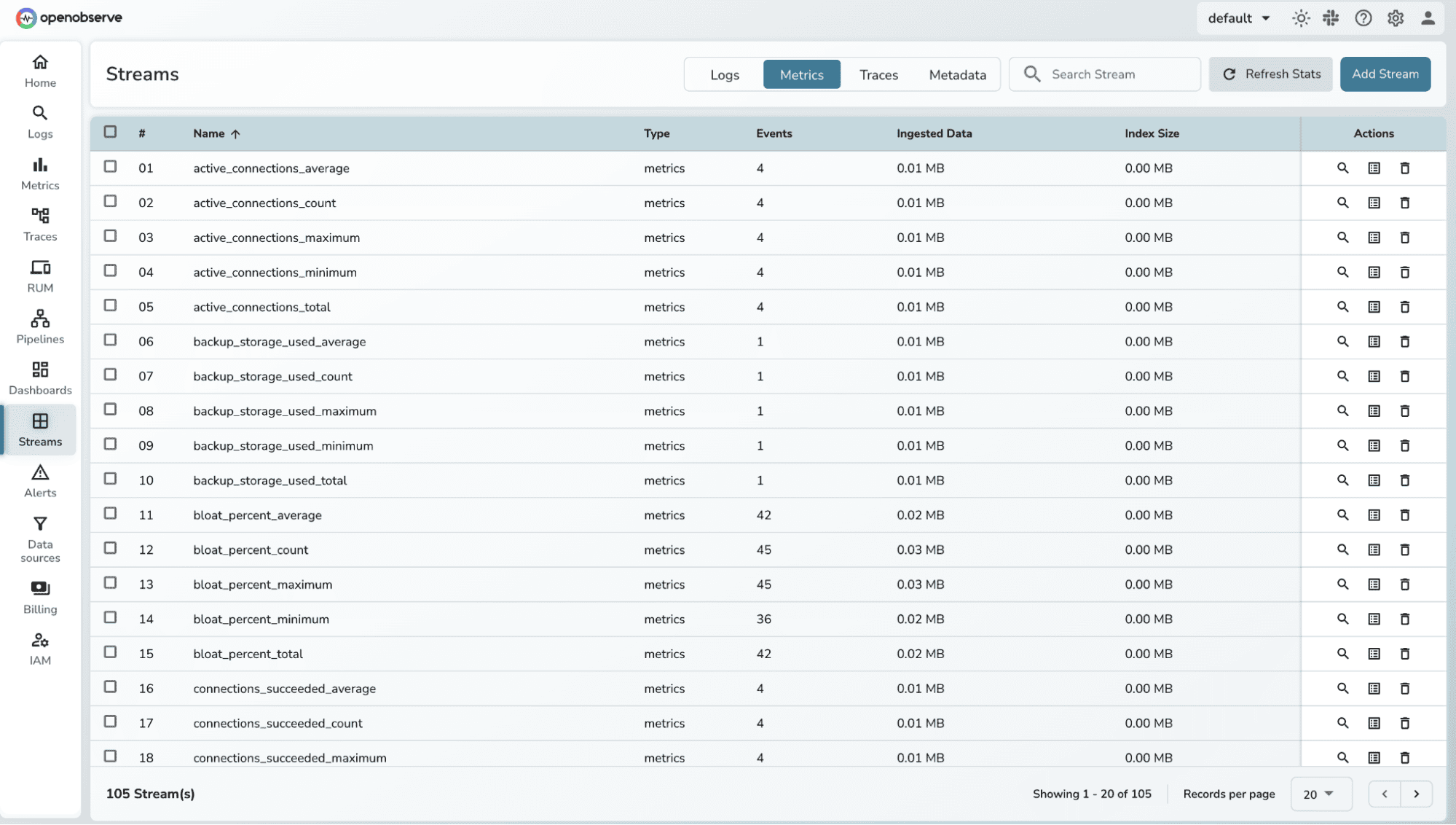

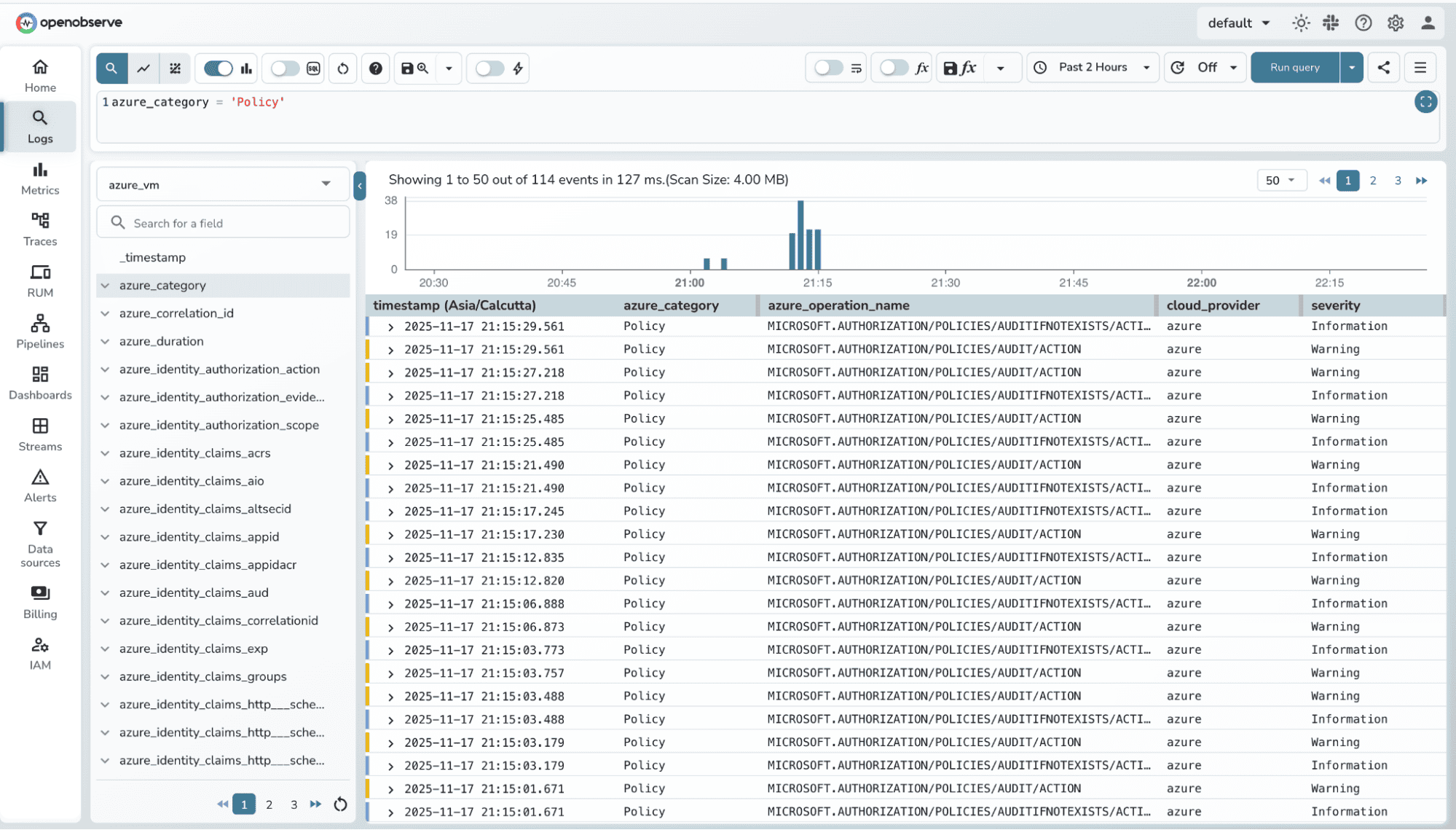

Confirm logs and metrics are arriving correctly in OpenObserve.

You can filter logs using SQL Queries. Refer to the Log searching and Filtering guide for more details.


Even with a clean pipeline, a few things can go wrong. Here’s how to quickly identify and fix them:

- Verify that the connection details in azure-to-o2.yaml match your Event Hub.
- Ensure the otelcol-contrib binary has execute permissions (chmod +x otelcol-contrib).
- Check for missing dependencies on the Collector host (for example, glibc or network connectivity to Event Hub).
- Review the logFormat / metrics sections. The Azure Event Hub receiver only supports azure or raw for logs; metrics require structured categories.

Tip: Always start small. Verify one resource first, then scale to multiple resources. This helps isolate configuration issues quickly.
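For the network connectivity case, one quick way to separate Collector problems from network problems is to test the TCP path from the Collector host to the namespace. This Python sketch assumes the receiver talks AMQP over TLS on port 5671, the standard AMQP port for Event Hubs. The helper names are hypothetical, and a successful connect only shows that the port is reachable, not that the credentials are valid.

```python
import socket
from urllib.parse import urlparse

AMQP_TLS_PORT = 5671  # assumption: AMQP over TLS, not AMQP over WebSockets (443)


def namespace_host(endpoint: str) -> str:
    """Extract the host name from an 'sb://<namespace>.servicebus.windows.net/' endpoint."""
    host = urlparse(endpoint).hostname
    if not host:
        raise ValueError(f"no host found in endpoint: {endpoint!r}")
    return host


def can_reach(host: str, port: int = AMQP_TLS_PORT, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False
```

Calling `can_reach(namespace_host("sb://o2namespace.servicebus.windows.net/"))` on the Collector host returns False when a firewall or NSG rule blocks the port, which points at the network rather than at azure-to-o2.yaml.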

Monitoring Azure doesn’t have to be fragmented. With a generic pipeline (Diagnostic Settings → Event Hub → OTel Collector → OpenObserve), you can collect logs and metrics from any resource without extra agents or custom exporters.

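Key points to remember:

- Diagnostic Settings are the common export path, but the available categories differ per resource.
- Use the contrib build of the OTel Collector; it includes the azureeventhub receiver.
- Start with one resource, verify the data in OpenObserve, then onboard the rest.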

With this approach, you get a centralized, consistent, and reusable observability pipeline for all your Azure resources, making monitoring simpler, faster, and more actionable.

Once your Azure resources are streaming into OpenObserve, you can level up your monitoring:

Build Dashboards: Visualize logs and metrics across VMs, databases, storage, and networking in one place. Focus on high-value signals like CPU spikes, slow queries, errors, or dropped packets.

Set Up Alerts: Use OpenObserve SQL-based alerts to catch anomalies, errors, or threshold breaches in real time. For example, alert when a database connection count exceeds a limit or VM disk I/O is saturated.

Aggregate & Correlate Across Resources: Combine logs and metrics from multiple resources to get a single-pane-of-glass view.

Automate Scaling & Response: Use the metrics and alerts to automate autoscaling or trigger incident workflows in your tooling.

Expand to More Resources: Once the pipeline works for VMs and databases, add storage accounts, AKS, Event Hubs, and Key Vaults. The pipeline is generic, so onboarding is straightforward.